Running Benchmark

In this wiki page, we will create a project, create a benchmark for it, and start a run of that benchmark.

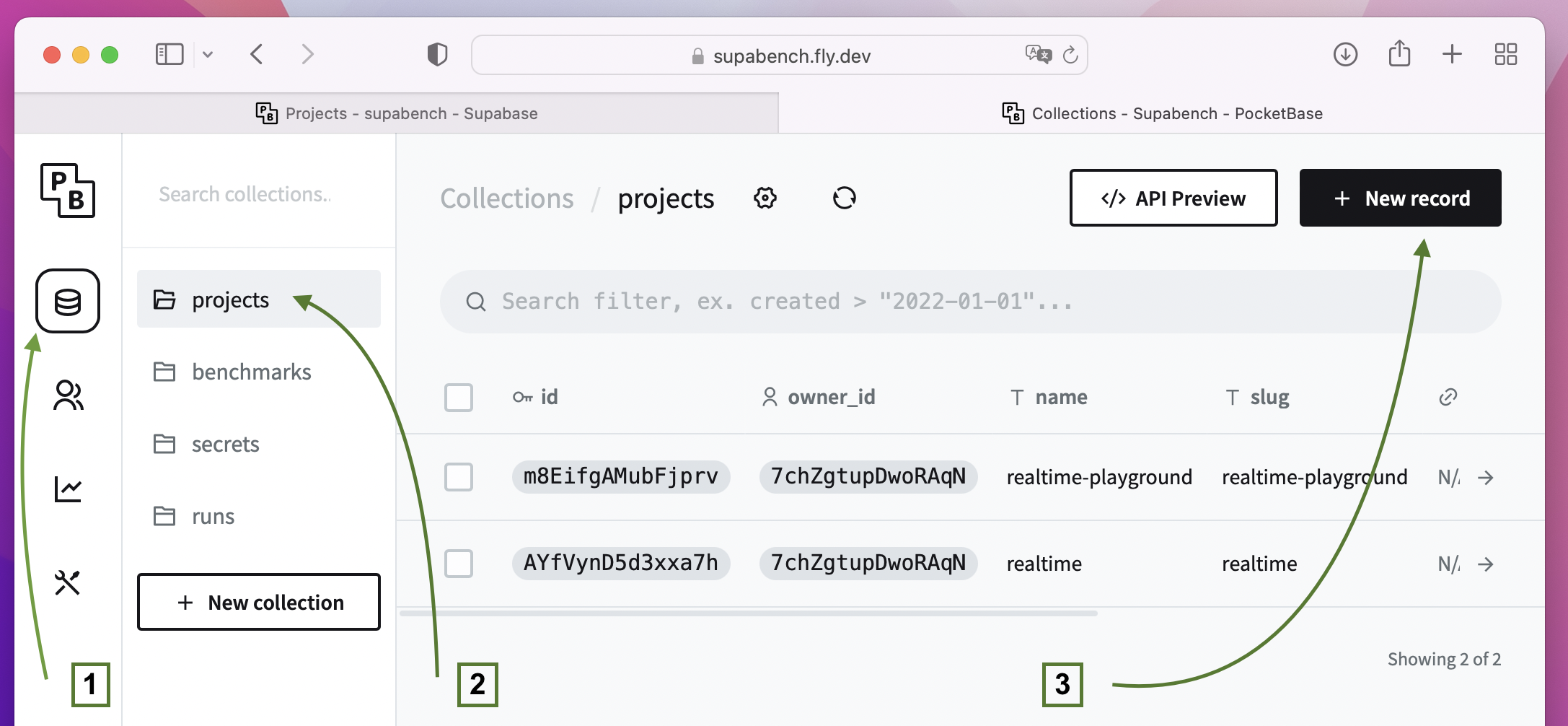

Create a project in the Supabench Admin UI. Navigate to your.supabench.url/_ and log in with your admin credentials.

Go to Collections > Projects and hit the New record button.

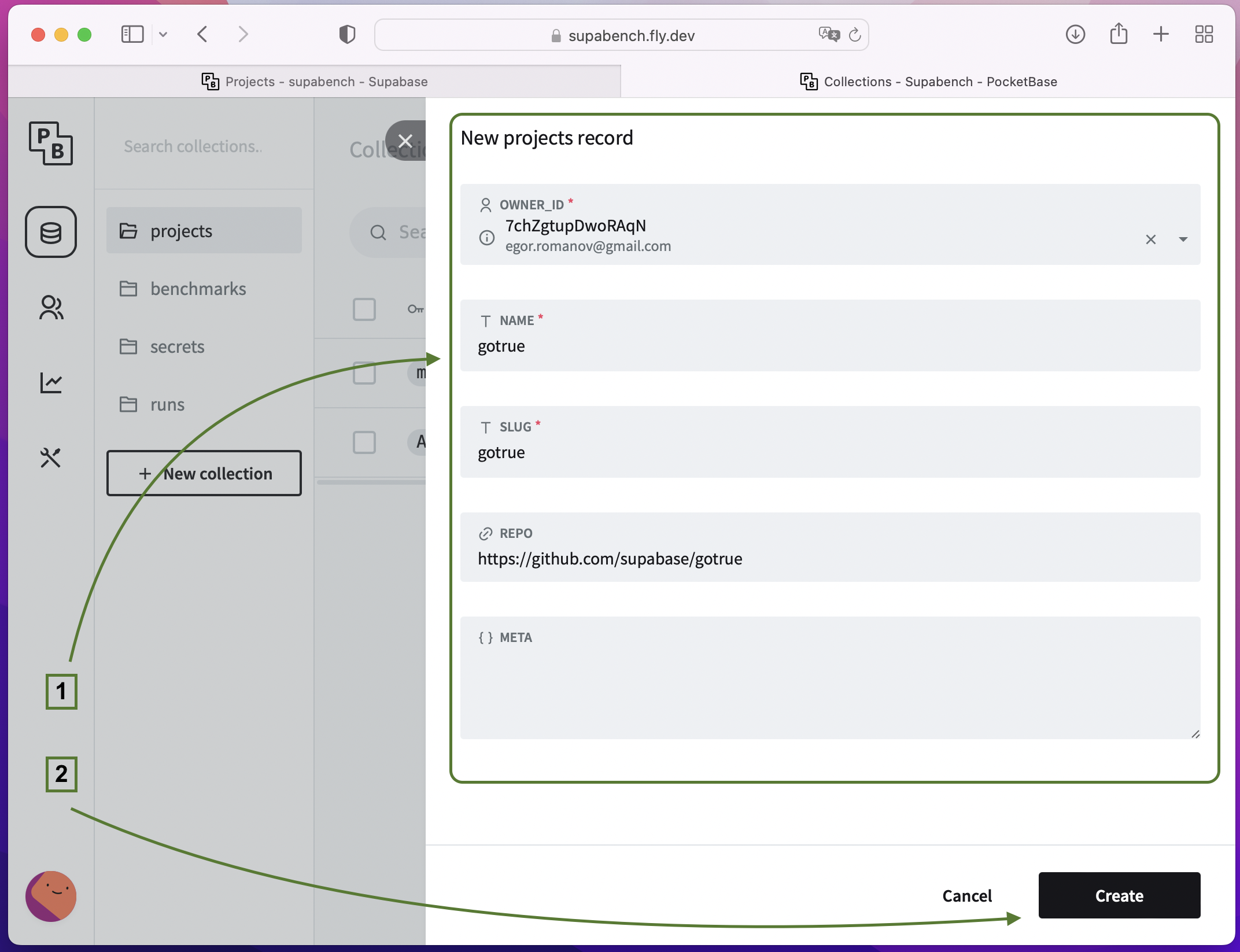

Projects are the top-level unit of Supabench. A project is the piece of software being tested: a microservice, a service, an app, or a library. Let's create a project to test the GoTrue service.

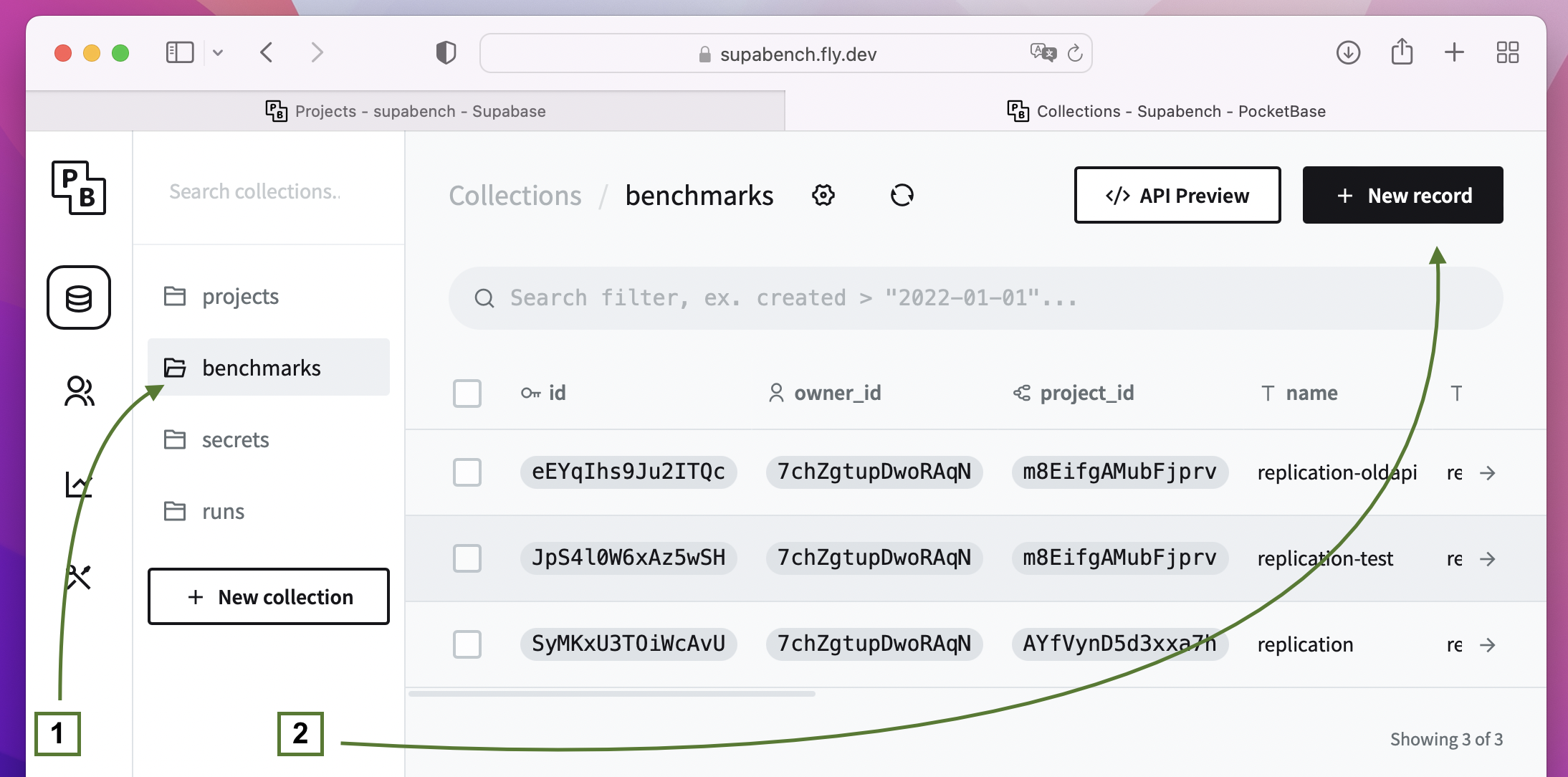

Create a benchmark for the project in the Supabench Admin UI. Go to Collections > Benchmarks and hit the New record button.

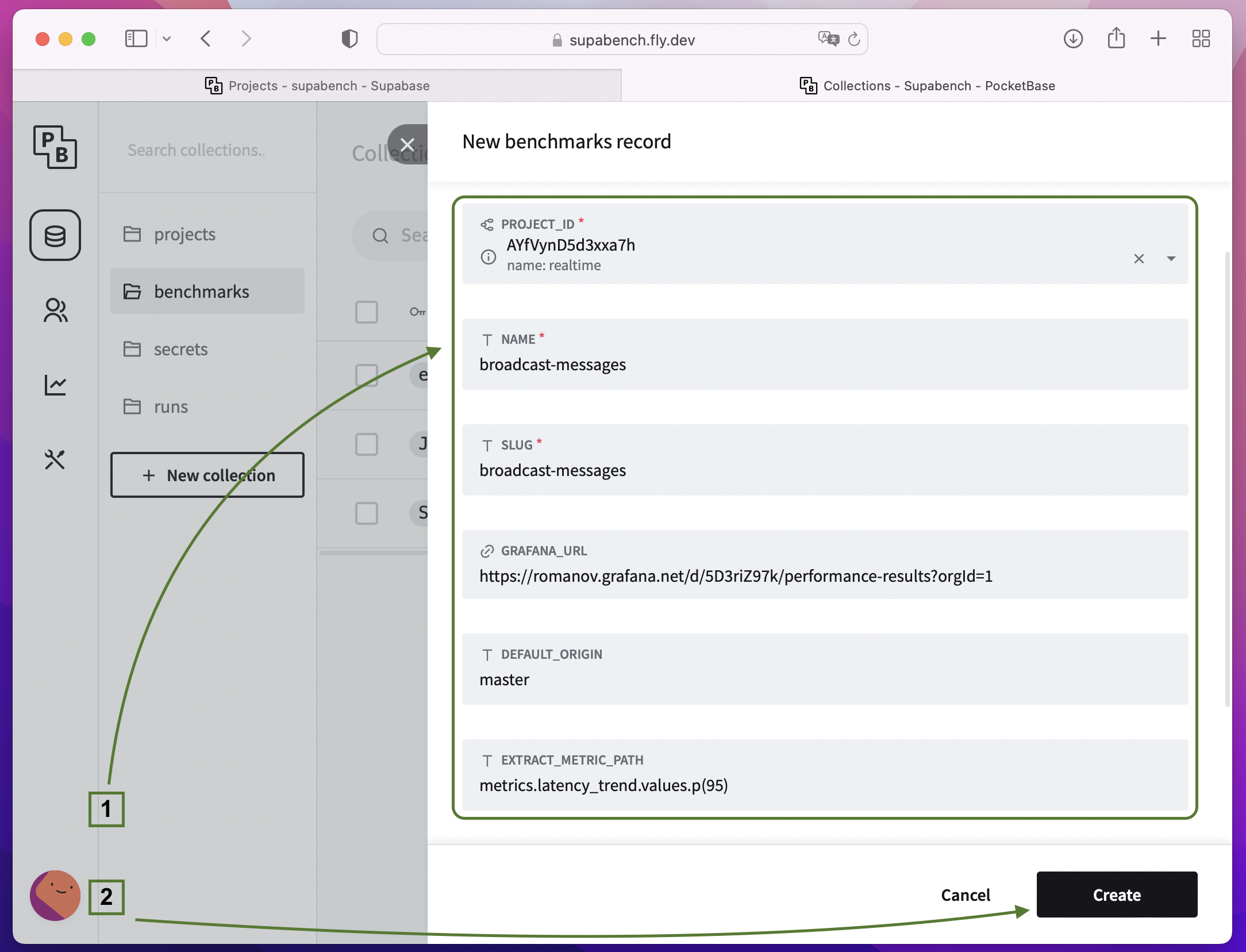

Benchmarks are the performance scenarios used to test the project. A project may have several performance scenarios, depending on its typical load profile and environment. Let's create a benchmark to test the Realtime service and measure the broadcast message throughput that one Realtime service can handle.

You will need to specify:

- Project to which this benchmark belongs

- Name of the benchmark

- Grafana URL of the dashboard for this benchmark's results (you will also need to build a Grafana dashboard to analyze the results)

- JSON path to the primary metric of the benchmark. This metric is extracted from the k6 summary JSON and is used to compare the benchmark's results over time.
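To make the idea of a JSON path to the primary metric concrete, here is a minimal sketch. It assumes a simple dot-separated path and a tiny, made-up summary document loosely shaped like a k6 summary export. The path syntax Supabench actually accepts may differ, and `resolve_path` and `SAMPLE_SUMMARY` are hypothetical names used only for illustration.

```python
from functools import reduce
from typing import Any

# Illustrative data only: a small stand-in for a k6 summary JSON document.
SAMPLE_SUMMARY = {
    "metrics": {
        "http_reqs": {"count": 1200, "rate": 40.0},
        "http_req_duration": {"avg": 12.5, "p(95)": 31.2},
    }
}


def resolve_path(document: dict, path: str) -> Any:
    """Walk a dot-separated path through nested dicts and return the value found.

    Raises KeyError if any segment of the path is missing.
    """
    return reduce(lambda node, key: node[key], path.split("."), document)


# Pick one number out of the summary to act as the primary metric.
primary_metric = resolve_path(SAMPLE_SUMMARY, "metrics.http_reqs.rate")
print(primary_metric)
```

Whatever path you configure should point at a single numeric value, since that value is what gets compared from run to run.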

Next, we need to add secrets for the benchmark. Secrets store benchmark data that should not be exposed to users who are not logged in: the terraform scripts that run the infrastructure, the k6 scripts that generate load, and hidden terraform and environment variables.

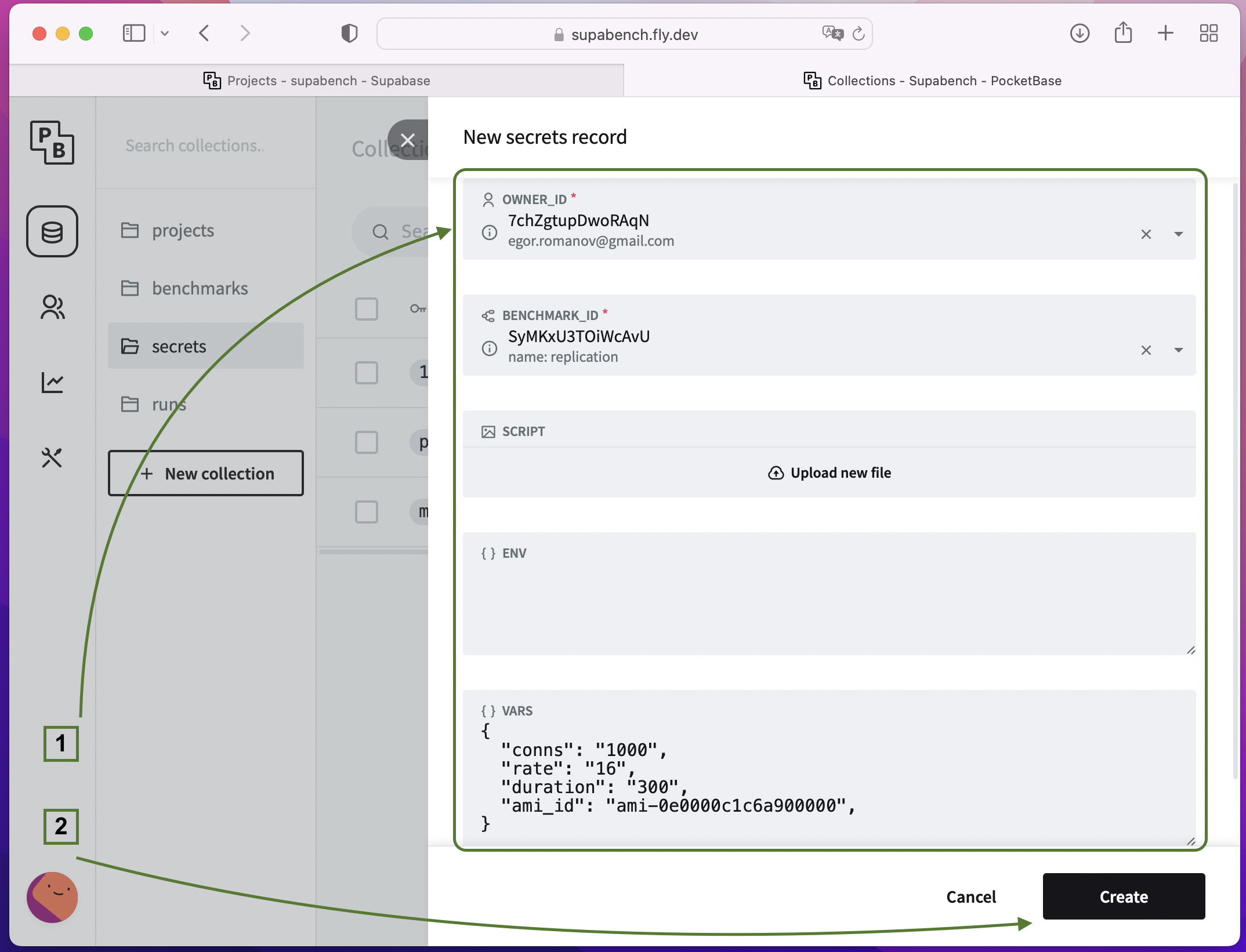

Go to Collections > Secrets and hit the New record button.

You will need to specify:

- Benchmark to which this secret belongs

- Upload archive with terraform and k6 scripts to run infrastructure and generate load.

- Env with a JSON containing environment variables for terraform and k6 scripts.

- Vars with a JSON containing variables for terraform scripts.
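The Env and Vars fields can be prepared and sanity-checked before pasting them into the form. The sketch below assumes each field holds a JSON object mapping names to values. All variable names and values are hypothetical placeholders, not settings that Supabench requires.

```python
import json

# Hypothetical values: replace with whatever your terraform and k6 scripts read.
env = {"K6_VUS": "10", "K6_DURATION": "60s"}
variables = {"region": "us-east-1", "instance_type": "t3.medium"}


def parse_json_object(text: str) -> dict:
    """Parse text as JSON and make sure the top-level value is an object."""
    value = json.loads(text)
    if not isinstance(value, dict):
        raise ValueError("expected a JSON object at the top level")
    return value


# Serialize the mappings to the text that would go into the Env and Vars fields.
env_json = json.dumps(env, indent=2)
vars_json = json.dumps(variables, indent=2)

# Round-trip check: the text parses back into the same mappings.
assert parse_json_object(env_json) == env
assert parse_json_object(vars_json) == variables
print(env_json)
print(vars_json)
```

Validating the JSON locally catches missing quotes or trailing commas before a run fails because of them.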

Start a run in the Supabench User UI. Navigate to your.supabench.url and log in if you are not logged in yet.

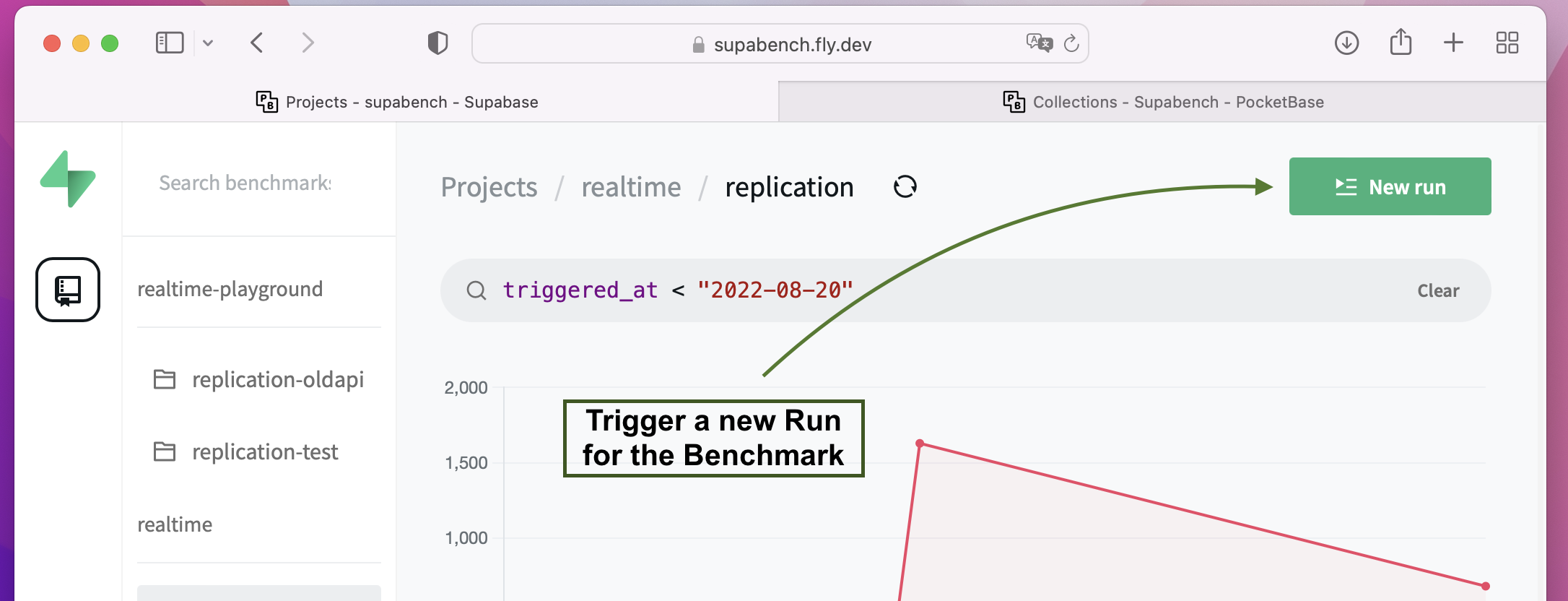

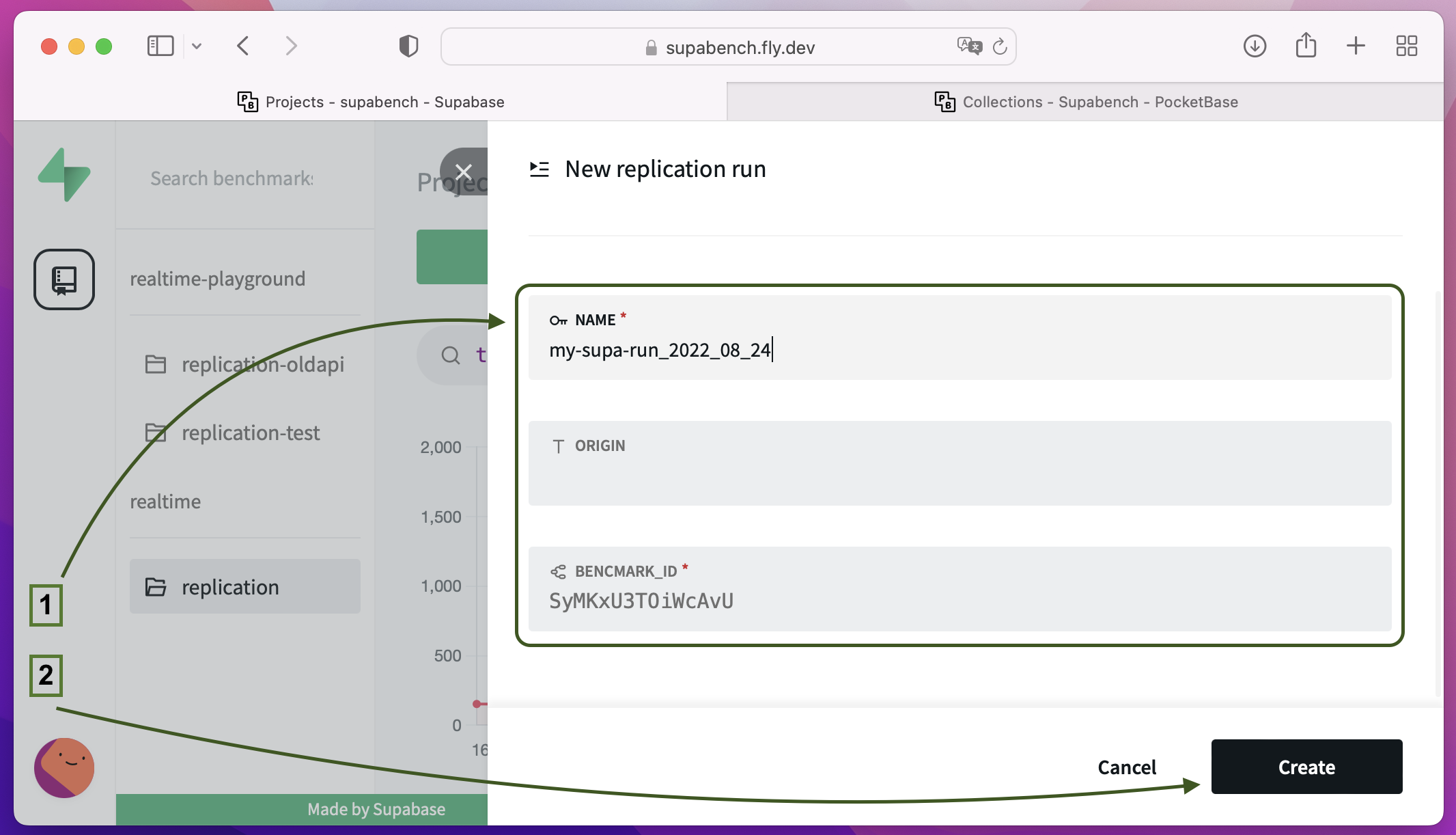

Select the benchmark you want to run and hit the New run button.

Specify the name of the run and smash the Create button.

That's all! You have done it. Now you can trigger new runs for the benchmark again and again.

Under the hood, your new run is put in a queue. It is executed as soon as possible by running terraform apply against the terraform scripts you uploaded when adding secrets for the benchmark.
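The queue-then-apply flow described above can be pictured with a short sketch. This is not Supabench's actual implementation. It assumes a first-in, first-out queue of runs, each carrying a hypothetical `vars` mapping, and a caller-supplied `run_command` function so that nothing is executed unless you choose to. The `-auto-approve` and `-var` flags are standard `terraform apply` options.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


def terraform_apply_command(variables: Dict[str, str]) -> List[str]:
    """Build a `terraform apply` command line with one -var flag per variable."""
    command = ["terraform", "apply", "-auto-approve"]
    for name, value in sorted(variables.items()):
        command += ["-var", f"{name}={value}"]
    return command


def drain_queue(
    queue: Deque[dict], run_command: Callable[[List[str]], int]
) -> Dict[str, str]:
    """Process queued runs in order and record a status for each run name."""
    statuses: Dict[str, str] = {}
    while queue:
        run = queue.popleft()  # oldest run first
        exit_code = run_command(terraform_apply_command(run["vars"]))
        statuses[run["name"]] = "finished" if exit_code == 0 else "failed"
    return statuses


# Dry run: record the commands instead of executing them.
issued: List[List[str]] = []


def fake_runner(command: List[str]) -> int:
    issued.append(command)
    return 0  # pretend terraform succeeded


pending = deque([
    {"name": "run-1", "vars": {"region": "us-east-1"}},
    {"name": "run-2", "vars": {"region": "eu-west-1"}},
])
print(drain_queue(pending, fake_runner))
```

To execute the commands for real, `run_command` could be something like `lambda cmd: subprocess.run(cmd).returncode`, run from the directory holding the terraform scripts.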

After the run, the results will be available in the Grafana dashboard with a small summary uploaded to the Supabench User UI.

You may also want to trigger a run from the command line (in the CI pipeline, for example). You can do this by sending an HTTP request to Supabench.

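```bash
curl --request POST \
  --url {supabench.url}/api/runs \
  --header "Authorization: Admin ${JWT_TOKEN}" \
  --data "{\"benchmark_id\":\"${BENCHMARK_ID}\",\"name\":\"my-testrun\",\"origin\":\"master\"}"
```

Replace {supabench.url} with your Supabench URL, and set the JWT_TOKEN and BENCHMARK_ID shell variables before running the command. The double quotes let the shell expand those variables.

If you have any issues understanding the terms, you may refer to Terminology.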
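For CI pipelines that prefer a script over a raw curl call, the same request can be built with Python's standard library. This is a sketch under assumptions: the endpoint, the `Admin` authorization scheme, and the payload fields are taken from the curl example, while the `Content-Type` header and the `build_run_request` helper are additions made for illustration.

```python
import json
import urllib.request


def build_run_request(
    base_url: str, token: str, benchmark_id: str, name: str, origin: str
) -> urllib.request.Request:
    """Build (but do not send) the POST request that creates a new run."""
    payload = {"benchmark_id": benchmark_id, "name": name, "origin": origin}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/runs",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Admin {token}",
            # Assumption: the API accepts a JSON body; the curl example
            # does not set this header explicitly.
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values; in CI these would come from pipeline secrets.
request = build_run_request(
    "https://supabench.example", "my-jwt", "my-benchmark-id", "my-testrun", "master"
)
print(request.get_method(), request.full_url)
# To actually trigger the run: urllib.request.urlopen(request)
```

Keeping request construction separate from sending makes it easy to check the URL and payload in a dry run before the pipeline talks to a live Supabench instance.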

Made by the Supabase team!