This repository was archived by the owner on Feb 18, 2025. It is now read-only.



Orchestrator. New seeding algorithm, support for different seed methods and agent redesign #1120

Open

MaxFedotov wants to merge 78 commits into openark:master from MaxFedotov:orchestrator_agent_dev



…eReplicaHostnameFilters

…ortseed. Add additional agents info to structs

…are stage will backupside=source, as there will be active socat waiting for connections

It seems there are some problems with vendoring packages, but I don't get these errors during a local build, so I definitely need your help with this :)

I was able to fix the build errors, but now the build fails when running my tests for the seed logic. The problem is that these tests require Docker to be installed and some customization of the /etc/hosts file, and I don't know how to configure that, so maybe we should exclude this test from running?

…agent wrong check for err != nil


Hi @shlomi-noach,

This PR is the orchestrator part of openark/orchestrator-agent#1.

This PR adds the following:

We define a seed as an operation that consists of a set of predefined stages, in which two orchestrator-agents participate: one is called the source agent (it is on the `source` side of the seed) and the other the target agent (it is on the `target` side of the seed). The goal of a seed is to transfer all data from the source agent to the target agent and to add the target agent as a slave of the source agent.

A seed can be executed using different seed methods: special ways or programs used to transfer data from the source agent to the target agent. The seed operation is processed entirely by orchestrator and runs at a configurable interval (`SeedProcessIntervalSeconds`).

By design, each seed operation consists of 5 stages, which are executed one after another:

- `Prepare`: executed on both agents
- `Backup`: executed on either the target or the source agent, depending on the chosen seed method
- `Restore`: executed on the target agent
- `ConnectSlave`: executed on the target agent
- `Cleanup`: executed on both agents
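A minimal Go sketch of the stage order and of which agents take part in each stage. The names `SeedStage`, `SeedSide`, `nextStage` and `agentsForStage`, and the `backupSide` parameter, are hypothetical and chosen for illustration; in particular, the backup side is assumed to be a property of the chosen seed method. This is not the code from this PR.

```go
package main

import "fmt"

// SeedStage is a hypothetical enum of the five seed stages, in execution order.
type SeedStage int

const (
	Prepare SeedStage = iota
	Backup
	Restore
	ConnectSlave
	Cleanup
)

func (s SeedStage) String() string {
	return [...]string{"Prepare", "Backup", "Restore", "ConnectSlave", "Cleanup"}[s]
}

// SeedSide identifies which agent takes part in a stage.
type SeedSide string

const (
	Source SeedSide = "source"
	Target SeedSide = "target"
)

// nextStage returns the stage that follows s, or false once Cleanup is reached.
func nextStage(s SeedStage) (SeedStage, bool) {
	if s == Cleanup {
		return s, false
	}
	return s + 1, true
}

// agentsForStage lists the agents that execute a stage.
// backupSide is assumed to be derived from the chosen seed method.
func agentsForStage(s SeedStage, backupSide SeedSide) []SeedSide {
	switch s {
	case Prepare, Cleanup:
		return []SeedSide{Source, Target}
	case Backup:
		return []SeedSide{backupSide}
	default: // Restore and ConnectSlave run on the target agent only
		return []SeedSide{Target}
	}
}

func main() {
	// Walk all stages for a seed method whose backup runs on the source side.
	for s, ok := Prepare, true; ok; s, ok = nextStage(s) {
		fmt.Println(s, agentsForStage(s, Source))
	}
}
```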

Each stage can be in one of 6 statuses:

- `Scheduled`: on the next seed processing cycle, orchestrator calls the orchestrator-agent API corresponding to the stage (prepare, backup, restore, ...) and the orchestrator-agent starts executing the stage. If the API call succeeds, the stage status is updated to `Running`; if not, it is updated to `Error`.
- `Running`: on the next seed processing cycle, orchestrator calls the orchestrator-agent API to get information about the stage and saves it to the database. The stage stays in this status until the orchestrator-agent reports either that the stage is `Completed`, in which case the seed moves to the next stage with `Scheduled` status, or that there were errors during the stage, in which case its status is updated to `Error`.
- `Completed`: an informational status only. Orchestrator does not process seeds in this status.
- `Error`: on the next seed processing cycle, orchestrator checks whether the number of times the stage has been in this status exceeds a configurable threshold (`MaxRetriesForSeedStage`). If it does, the seed is marked as `Failed` and is no longer processed. Otherwise the seed is restarted, i.e. moved to the `Scheduled` status of the current stage. The one exception is an error during the `Backup` stage: in that case the seed is restarted from the `Prepare` stage, because some seed methods do important work during `Prepare` (for example, socat is started on the target agent during the `Prepare` stage). If the stage runs on both agents and only one of them hit an error, orchestrator aborts the stage on the other agent before restarting it.
- `Failed`: an informational status only. Orchestrator does not process seeds in this status.
- `Aborting`: if a user clicks the `Abort` button in the UI or uses the `Abort` API, the stage is moved to this status. Orchestrator calls the abort API on the agent (or on both agents, if the stage involves both). If this succeeds, the seed is marked as `Failed` and is no longer processed; if not, orchestrator tries to abort it again on every following seed processing cycle until it succeeds.

A seed is considered active (that is, it should be processed by orchestrator) only if its stage is in the `Scheduled`, `Running`, `Error` or `Aborting` status.
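The `Error` handling and the notion of an active seed can be sketched as below, again as a hypothetical Go model rather than the PR's implementation. `errorCount` stands for the number of times the stage has entered `Error` and `maxRetries` plays the role of `MaxRetriesForSeedStage`; aborting the stage on the other agent before a retry is left out.

```go
package main

import "fmt"

type SeedStage int

const (
	Prepare SeedStage = iota
	Backup
	Restore
	ConnectSlave
	Cleanup
)

type SeedStatus string

const (
	Scheduled SeedStatus = "Scheduled"
	Running   SeedStatus = "Running"
	Completed SeedStatus = "Completed"
	Error     SeedStatus = "Error"
	Failed    SeedStatus = "Failed"
	Aborting  SeedStatus = "Aborting"
)

// isActive reports whether orchestrator should still process a seed
// whose current stage is in status st.
func isActive(st SeedStatus) bool {
	switch st {
	case Scheduled, Running, Error, Aborting:
		return true
	}
	return false
}

// handleError returns the stage and status a seed moves to when its
// current stage is in Error status (hypothetical helper).
func handleError(stage SeedStage, errorCount, maxRetries int) (SeedStage, SeedStatus) {
	if errorCount > maxRetries {
		return stage, Failed // too many retries: stop processing this seed
	}
	if stage == Backup {
		// Some seed methods set things up during Prepare (e.g. socat on the
		// target agent), so a failed Backup restarts the seed from Prepare.
		return Prepare, Scheduled
	}
	return stage, Scheduled // retry the current stage
}

func main() {
	fmt.Println(handleError(Restore, 1, 3))
	fmt.Println(handleError(Backup, 2, 3))
	fmt.Println(handleError(Restore, 4, 3))
	fmt.Println(isActive(Running), isActive(Failed))
}
```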

All seed processing is done by the `ProcessSeeds()` function, which, for each active seed, starts a new goroutine that handles the seed according to its status, and then waits until all of them complete.

So the overall process looks like this:

1. The orchestrator API `/agent-seed/:seedMethod/:targetHost/:sourceHost` is called. Various pre-seed checks are run and, if they pass, a new seed is created in the `Scheduled` status of the `Prepare` stage.
2. Orchestrator calls `/api/prepare/:seedID/:seedMethod/:seedSide` on both orchestrator-agents. If these calls succeed, it updates the `Prepare` stage status to `Running` for both agents.
3. Orchestrator calls `/api/seed-stage-state/:seedID/:seedStage` on both orchestrator-agents and logs the result. If both agents report that they have completed the `Prepare` stage, the stage is marked as `Completed` and the seed moves to the `Backup` stage in `Scheduled` status. If one of the agents has not completed the stage yet, the seed stays on this stage; during the next `ProcessSeeds()` call, `processRunning()` runs again and calls `/api/seed-stage-state/:seedID/:seedStage` on the remaining agent. This continues until both agents complete the stage.
4. Orchestrator calls `/api/backup/:seedID/:seedMethod/:seedHost/:mysqlPort` on one of the agents, depending on the chosen seed method. If this call succeeds, it updates the `Backup` stage status to `Running` for that agent.
5. Orchestrator calls `/api/seed-stage-state/:seedID/:seedStage` on that agent. If the agent reports that it has completed the `Backup` stage, the stage is marked as `Completed` and the seed moves to the `Restore` stage in `Scheduled` status.
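For reference, the agent endpoints used in the steps above can be built as in this sketch. Only the path templates come from the description; the helper names, the `int64` seed ID and every value in `main` (agent address, port, method and host names) are placeholders, and the sketch does not model which HTTP method each endpoint expects or what it returns.

```go
package main

import (
	"fmt"
	"net/url"
	"strconv"
)

// joinPath appends path-escaped segments to a base URL.
func joinPath(base string, segments ...string) string {
	for _, s := range segments {
		base += "/" + url.PathEscape(s)
	}
	return base
}

// prepareURL builds /api/prepare/:seedID/:seedMethod/:seedSide.
func prepareURL(agentURL string, seedID int64, seedMethod, seedSide string) string {
	return joinPath(agentURL+"/api/prepare", strconv.FormatInt(seedID, 10), seedMethod, seedSide)
}

// seedStageStateURL builds /api/seed-stage-state/:seedID/:seedStage.
func seedStageStateURL(agentURL string, seedID int64, seedStage string) string {
	return joinPath(agentURL+"/api/seed-stage-state", strconv.FormatInt(seedID, 10), seedStage)
}

// backupURL builds /api/backup/:seedID/:seedMethod/:seedHost/:mysqlPort.
func backupURL(agentURL string, seedID int64, seedMethod, seedHost string, mysqlPort int) string {
	return joinPath(agentURL+"/api/backup", strconv.FormatInt(seedID, 10), seedMethod, seedHost, strconv.Itoa(mysqlPort))
}

func main() {
	// Placeholder values: agent address, seed ID, method name and hosts are made up.
	agent := "http://target-agent.example:3002"
	fmt.Println(prepareURL(agent, 42, "example-method", "target"))
	fmt.Println(seedStageStateURL(agent, 42, "Prepare"))
	fmt.Println(backupURL(agent, 42, "example-method", "source-db.example", 3306))
}
```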

So basically, this works like a state machine that moves the seed from start to finish along the following path:

New seed -> Prepare scheduled -> Prepare running -> Prepare completed -> Backup scheduled -> Backup running -> Backup completed -> Restore scheduled -> Restore running -> Restore completed -> ConnectSlave scheduled -> ConnectSlave running -> ConnectSlave completed -> Cleanup scheduled -> Cleanup running -> Cleanup completed -> Seed completed

I've also created various test cases, located in `agent_test.go`, which simulate all of this logic using Docker and orchestrator-agent mocks.

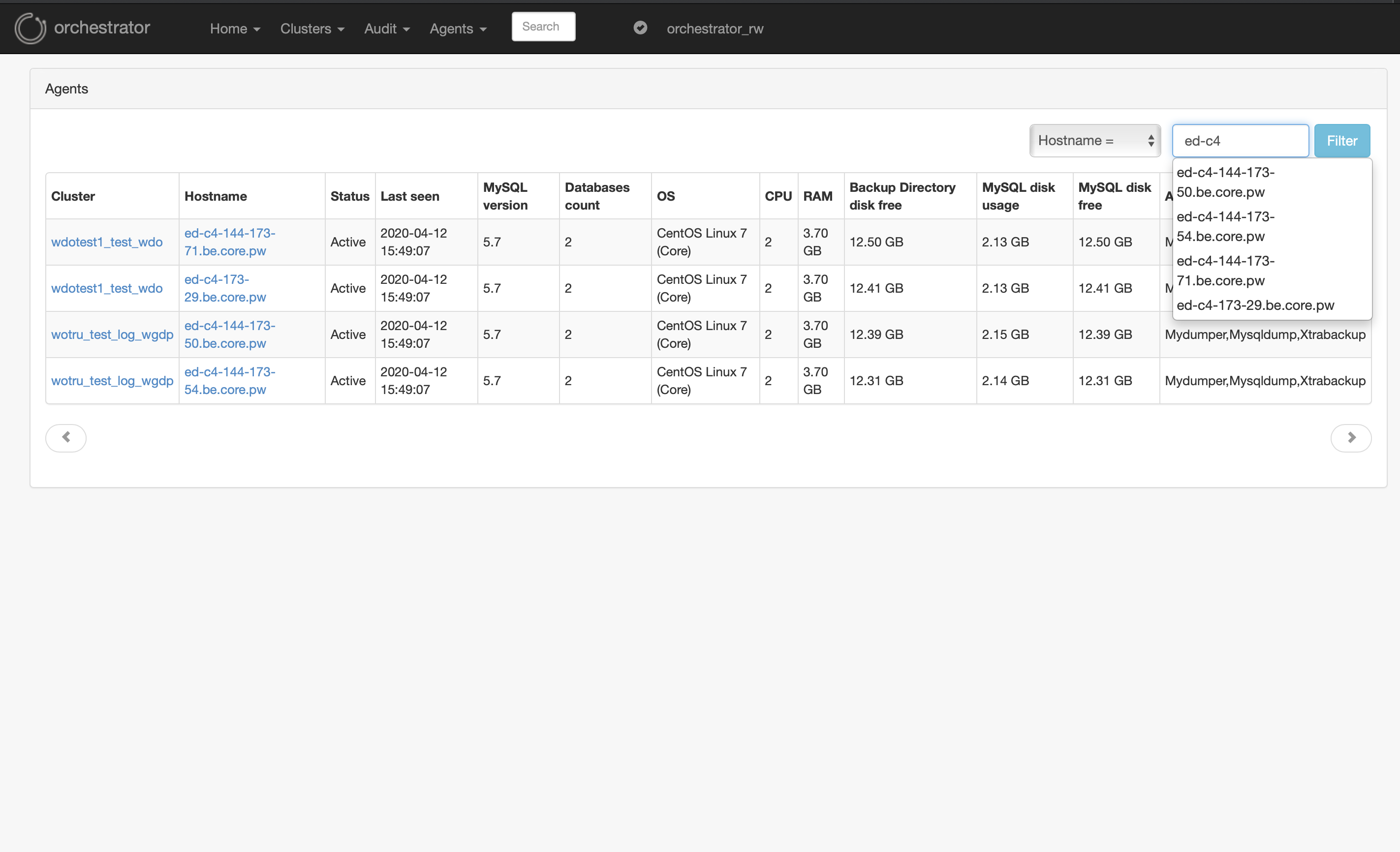

orchestratorUI is also updated to support all changes. I've tried to keep all previous functions regarding LVM\local snapshot hosts\remote snapshot hosts as much backward-compatible, as possible.New UI includes agent page with various filters with auto-complete

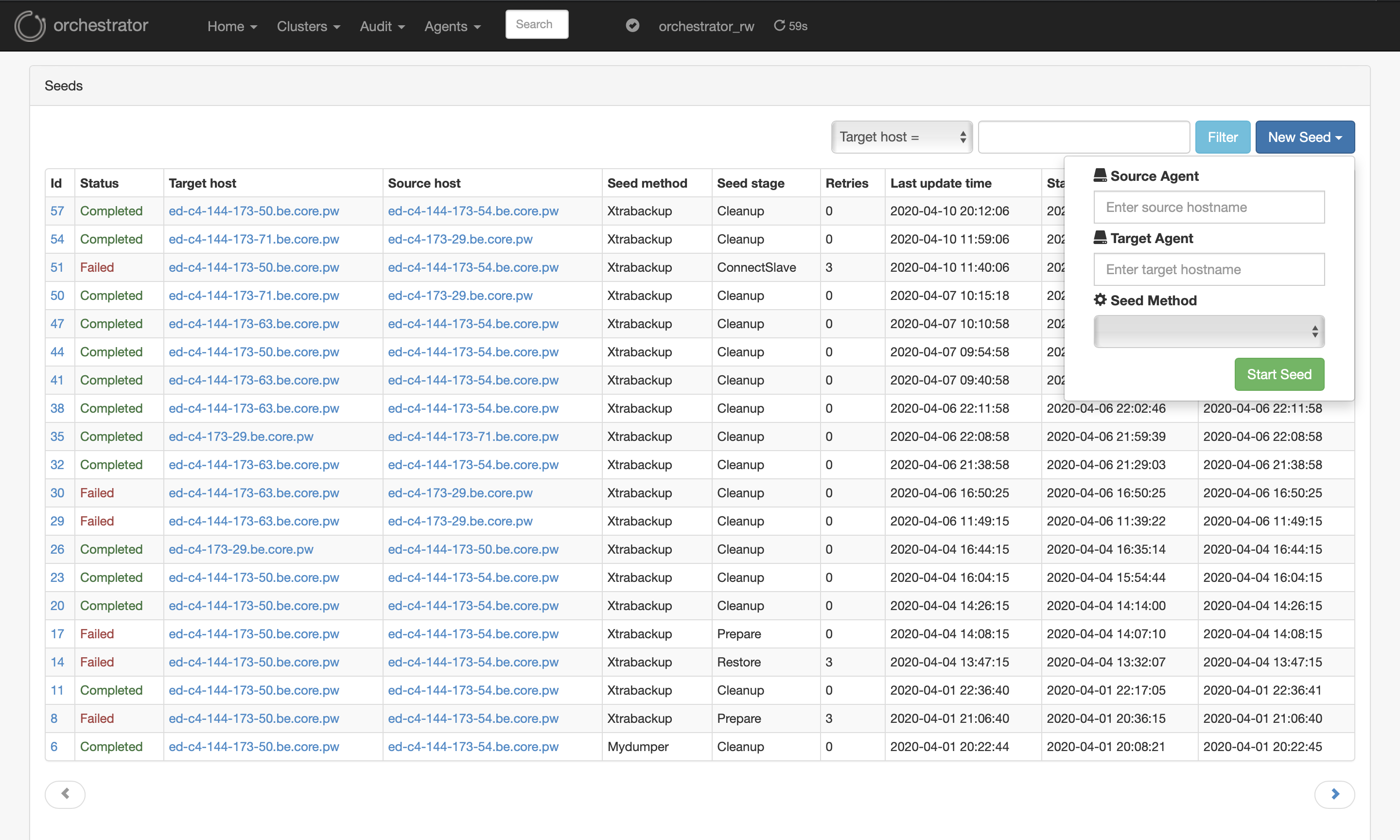

Updated seeds page with auto-complete filters and a UI for starting a new seed

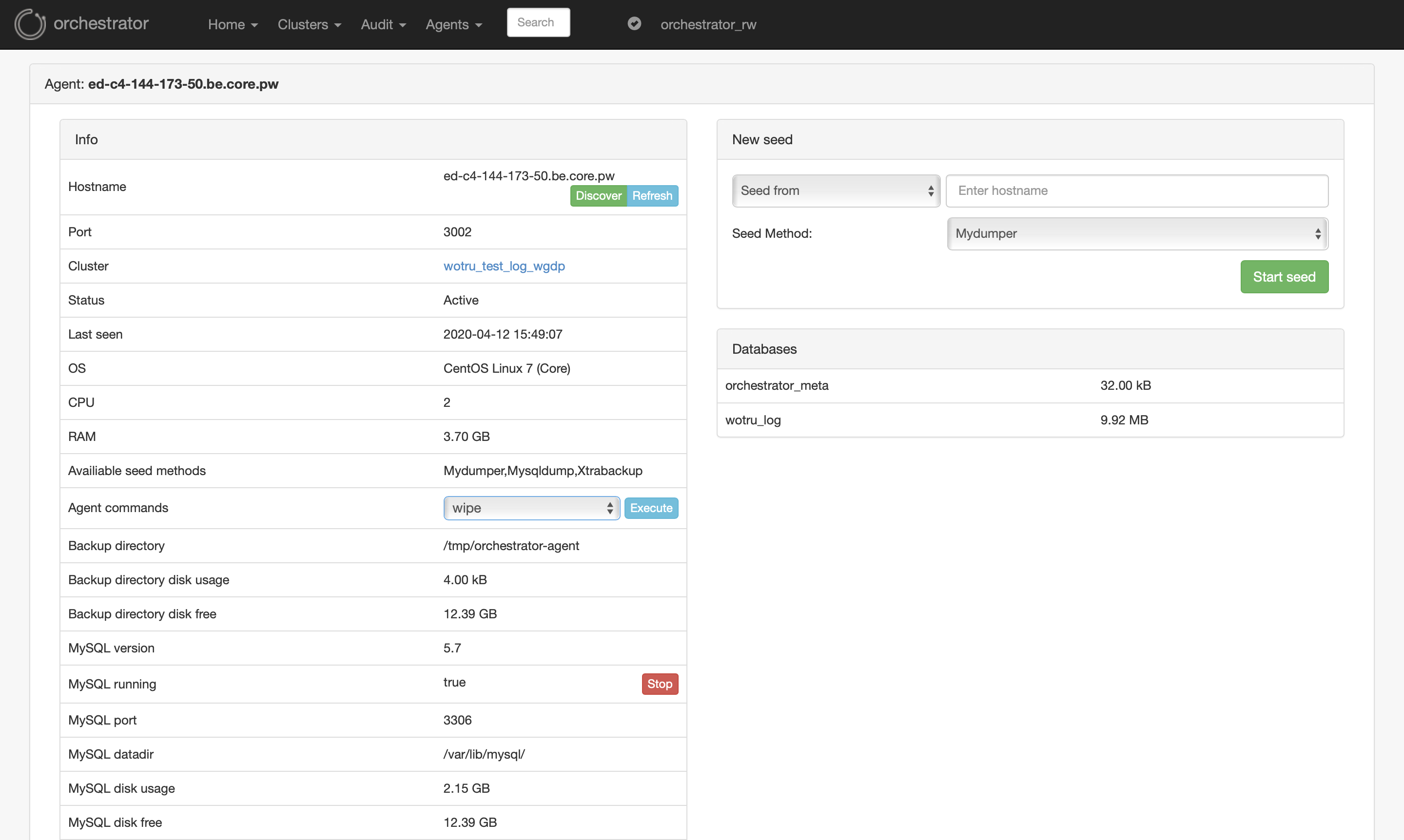

Updated agent page (some LVM-specific UI elements are not shown because this agent does not have the LVM seed method added)

We have already built our own auto-provisioning solution based on orchestrator, orchestrator-agent and Puppet, where new hosts are automatically added to a cluster as slaves. We have started testing it in our staging environment, but I still consider this functionality to be in beta, as there will certainly be some bugs that we will only catch with active production usage.

That said, I would be very grateful for your feedback and comments and will be glad to answer any questions :)

Thanks,

Max