A professional-grade machine learning pipeline that demonstrates ETL (Extract-Transform-Load) processes, model training, and deployment using modern best practices.

ml_challenge/

│── data/ # Sample input/output data

│ └── user_logs.json # Example JSON dataset

│

│── src/ # Source code modules

│ ├── __init__.py # Package initialization

│ ├── ingest.py # Data loading and validation

│ ├── transform.py # Data transformation and feature engineering

│ ├── train.py # Model training and evaluation

│ ├── serialize.py # Model persistence and versioning

│ ├── api.py # FastAPI web service for predictions

│ └── pipeline.py # Main ETL + ML workflow orchestrator

│

│── tests/ # Unit tests

│ ├── test_ingest.py # Data ingestion tests

│ └── test_transform.py # Data transformation tests

│

│── models/ # Trained model artifacts

│── Dockerfile # ML container definition

│── docker-compose.yml # Service orchestration

│── requirements.txt # Python dependencies

│── generate_sample_data.py # Sample data generator

│── README.md # This file

- Professional Code Structure: Modular design with clear separation of concerns

- Comprehensive ETL Pipeline: Data ingestion, validation, transformation, and aggregation

- Advanced Feature Engineering: Time-series features, lag variables, rolling averages

- Multiple ML Models: Linear Regression and Random Forest with cross-validation

- Model Versioning: Comprehensive model serialization with metadata and integrity checks

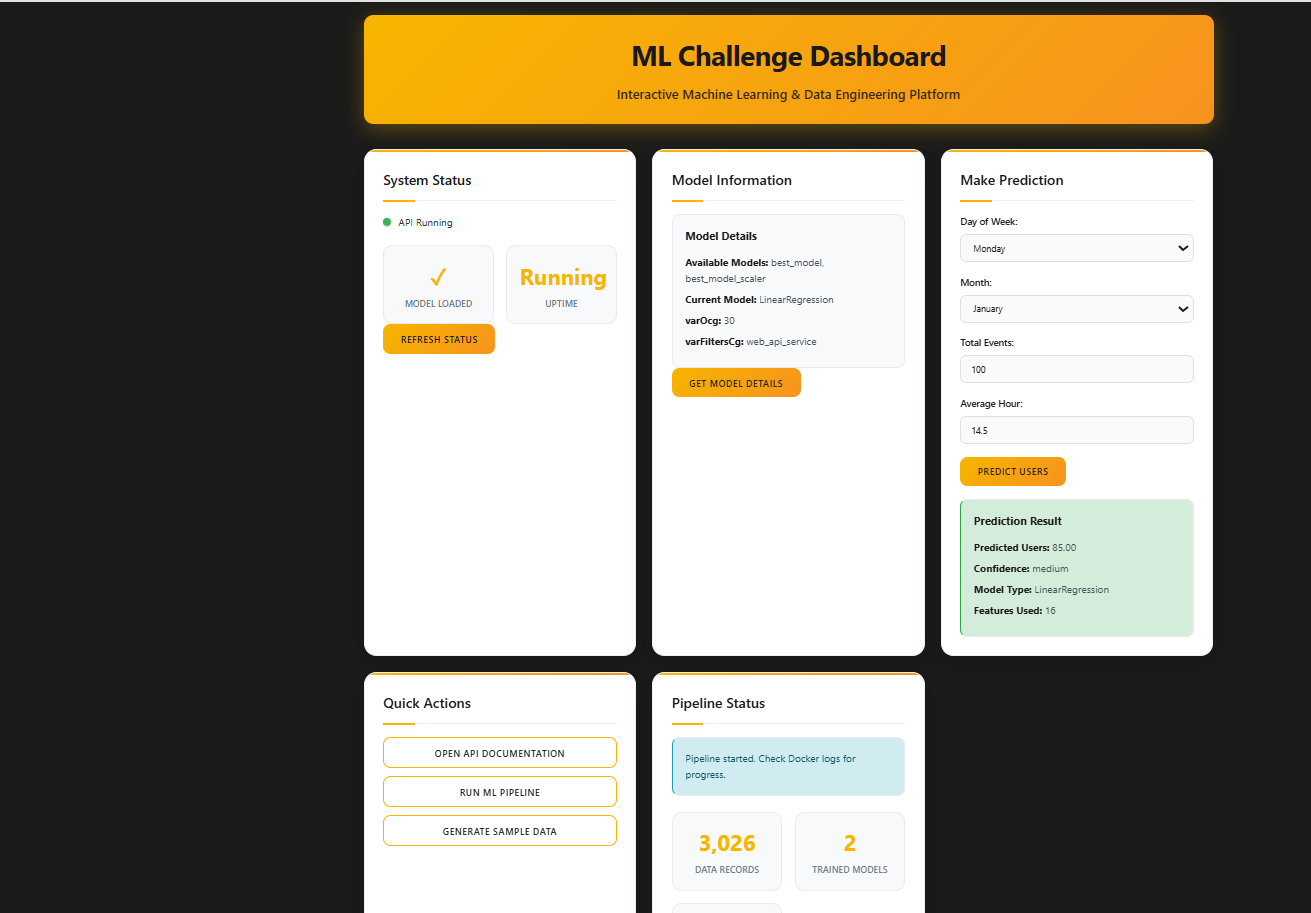

- RESTful API: FastAPI-based web service for real-time predictions

- Containerization: Docker support with health checks and orchestration

- Testing: Unit tests with pytest framework

- Logging: Comprehensive logging throughout the pipeline

- Error Handling: Robust error handling with custom exceptions

- Python 3.11+

- Docker and Docker Compose (for containerized deployment)

- 4GB+ RAM (for model training)

-

Clone the repository

git clone <your-repo-url> cd ml_challenge

-

Create virtual environment

python -m venv venv source venv/bin/activate # On Windows: venv\Scripts\activate

-

Install dependencies

pip install -r requirements.txt

- Build and run with Docker Compose

docker-compose up --build

python generate_sample_data.pyThis creates data/user_logs.json with sample user activity data.

python -m src.pipelineThis executes the full ETL + ML workflow:

- Data ingestion and validation

- Data transformation and feature engineering

- Model training and evaluation

- Model serialization

python -m uvicorn src.api:app --host 0.0.0.0 --port 5000Access the API at:

- API Documentation: http://localhost:5000/docs

- Health Check: http://localhost:5000/health

- Root Endpoint: http://localhost:5000/

The pipeline can be configured through the MLPipeline class:

config = {

'data_path': 'data/user_logs.json',

'model_dir': 'models',

'timeframe_days': 30,

'test_size': 0.2,

'random_state': 42

}

pipeline = MLPipeline(config)

pipeline.run_pipeline()curl -X POST "http://localhost:5000/predict" \

-H "Content-Type: application/json" \

-d '{

"day_of_week": 1,

"month": 6,

"is_weekend": 0,

"total_events": 85,

"avg_hour": 14.5,

"rolling_7d_users": 120.3,

"rolling_7d_events": 95.7

}'curl -X POST "http://localhost:5000/batch-predict" \

-H "Content-Type: application/json" \

-d '[

{

"day_of_week": 1,

"month": 6,

"is_weekend": 0,

"total_events": 85,

"avg_hour": 14.5,

"rolling_7d_users": 120.3,

"rolling_7d_events": 95.7

}

]'Run the test suite:

# Run all tests

pytest

# Run with coverage

pytest --cov=src

# Run specific test file

pytest tests/test_ingest.py# Build the image

docker build -t ml-challenge .

# Run the container

docker run -p 5000:5000 ml-challenge

# Run with Docker Compose

docker-compose up --build# Run only the API service

docker-compose up ml-api

# Run the data processing pipeline

docker-compose --profile pipeline up

# Generate sample data

docker-compose --profile data-gen up data-generator- JSON data loading with validation

- Data quality checks

- Summary statistics generation

- Timestamp parsing and feature extraction

- Timeframe filtering (configurable via

varOcg) - Daily aggregation and metrics calculation

- Advanced feature engineering (lag, rolling averages, trends)

- Feature preparation and scaling

- Multiple model training (Linear Regression, Random Forest)

- Cross-validation and evaluation metrics

- Model comparison and selection

- Model persistence with joblib

- Metadata tracking and versioning

- File integrity checks

- Backup and restoration capabilities

- FastAPI-based REST service

- Real-time predictions

- Batch processing support

- Health monitoring and model status

- End-to-end workflow coordination

- Step-by-step execution

- State tracking and logging

- Error handling and recovery

The ML pipeline trains multiple models and automatically selects the best performing one for production use.

-

Linear Regression (

sklearn.linear_model.LinearRegression)- Fast, interpretable, good for linear relationships

- Uses 16 engineered features

- Suitable for continuous target prediction

-

Random Forest (

sklearn.ensemble.RandomForestRegressor)- Robust, handles non-linear relationships

- Feature importance analysis

- Good for complex patterns

The system automatically selects the best model based on:

- Cross-validation scores (5-fold CV)

- Multiple metrics: RMSE, R², MAE, MAPE

- Performance consistency across folds

- Computational efficiency considerations

- Selected Model: Linear Regression

- Model Files:

best_model.joblib- Trained modelbest_model_scaler.joblib- Feature scaler

- Features Used: 16 engineered features

- Training Data: 3,026 records

- Model Performance: Available in

models/best_model_results.json

The model uses 16 carefully engineered features:

- Temporal: day_of_week, month, is_weekend

- Event-based: total_events, avg_hour, std_hour

- Behavioral: weekend_ratio, rolling averages

- Historical: lag features, moving averages, trends

- Serialization: Using

joblibfor efficient storage - Versioning: Model metadata and performance tracked

- Integrity: SHA-256 hash verification

- Backup: Automatic backup before updates

- Real-time Predictions: Single and batch prediction endpoints

- Model Status: Health checks and model information

- Feature Validation: Input validation for all 16 features

- Error Handling: Graceful degradation on model issues

- Pipeline State: Stored in

pipeline_state.json - Logs: Written to

pipeline.logand console - Model Artifacts: Stored in

models/directory - Health Checks: Available at

/healthendpoint

The pipeline includes comprehensive error handling:

- Custom Exceptions: Specific error types for each module

- Graceful Degradation: Pipeline continues with available data

- Detailed Logging: Comprehensive error information

- State Persistence: Pipeline state saved even on failure

As required by the challenge:

__define-ocg__: Present in all module commentsvarOcg: Set to 30 (timeframe in days)varFiltersCg: Context-specific identifier for each module

- Create new module in

src/directory - Add tests in

tests/directory - Update requirements.txt if new dependencies needed

- Update Dockerfile if system dependencies required

- Follow PEP 8 guidelines

- Include comprehensive docstrings

- Use type hints throughout

- Implement proper error handling

- Fork the repository

- Create a feature branch

- Make your changes

- Add tests for new functionality

- Submit a pull request

This project is part of the ML & Data Engineering Challenge.

For issues and questions:

- Check the logs in

pipeline.log - Review the API documentation at

/docs - Check the pipeline state in

pipeline_state.json - Verify Docker container health with

docker-compose ps