forked from mymagicpower/AIAS

Commit

1 parent 6c93003, commit c39080e

Showing 92 changed files with 11,353 additions and 125 deletions.

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,97 @@ | ||

|

|

||

| ### Download the model and place it in the /models directory | ||

| - Link: https://github.com/mymagicpower/AIAS/releases/download/apps/crowdnet.zip | ||

|

|

||

| ### Crowd Density Detection SDK | ||

| CrowdNet is a crowd density estimation model proposed in 2016 in the paper "CrowdNet: A Deep Convolutional Network for Dense Crowd Counting". It combines a deep convolutional network with a shallow one. It is trained on pairs of an original image and a density map produced by Gaussian filtering, and at inference time it estimates the number of people in the image. In principle the same approach is not limited to crowds and should also work for density estimation of other subjects, such as animals. | ||

|

|

||

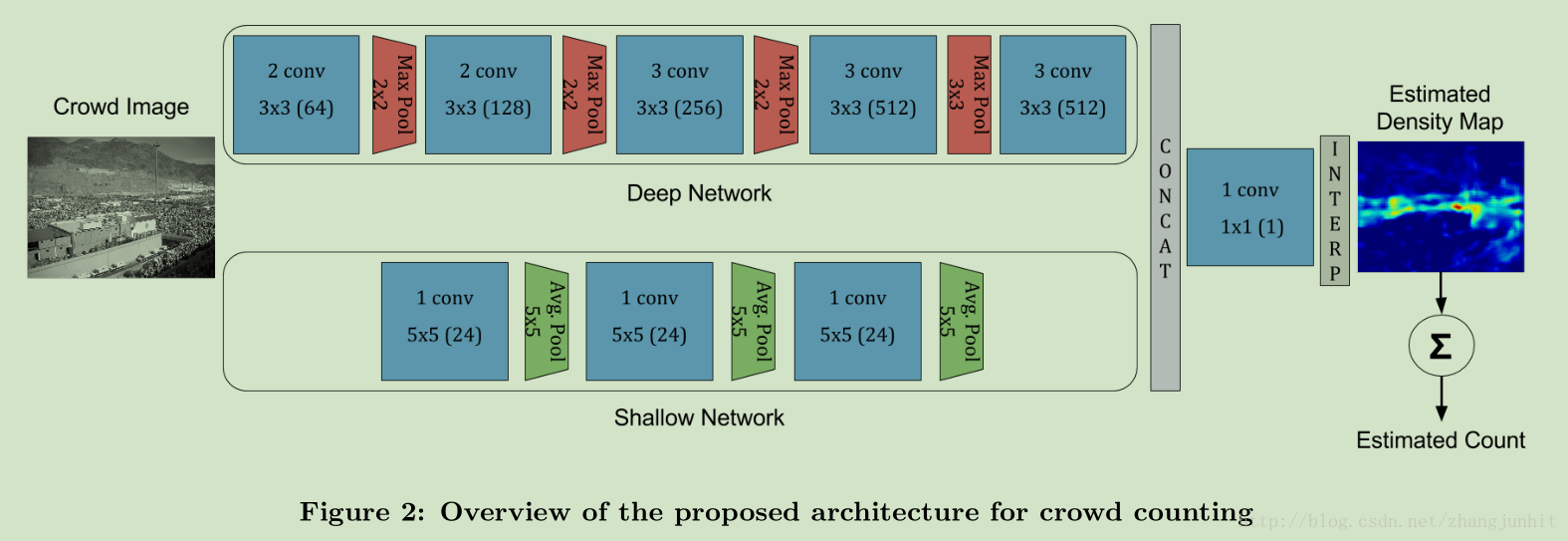

| The diagram below shows the structure of the CrowdNet model. It consists of a deep convolutional network (Deep Network) and a shallow convolutional network (Shallow Network). The outputs of the two networks are concatenated and fed into a convolutional layer with a kernel size of 1. The result is then interpolated into a density map, and the estimated number of people is obtained by summing over that density map. | ||
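To illustrate why summing a density map yields a head count, the sketch below places one normalized Gaussian per annotated head position, so that each person contributes a total mass of 1. This is a minimal pure-Python sketch and not the CrowdNet training code; the function names, the grid size, the head positions, and `sigma` are all assumptions made for this example.

```python
import math


def gaussian_density_map(heads, height, width, sigma=2.0):
    """Build a density map with one unit-mass Gaussian per head position.

    heads: list of (row, col) positions (hypothetical annotations).
    """
    density = [[0.0] * width for _ in range(height)]
    for head_row, head_col in heads:
        # Unnormalized Gaussian weights over the whole grid.
        weights = [
            [
                math.exp(-((r - head_row) ** 2 + (c - head_col) ** 2) / (2 * sigma ** 2))
                for c in range(width)
            ]
            for r in range(height)
        ]
        total = sum(sum(row) for row in weights)
        # Normalize so this person contributes exactly 1 to the map's sum.
        for r in range(height):
            for c in range(width):
                density[r][c] += weights[r][c] / total
    return density


def estimate_count(density):
    """The estimated count is the sum (a discrete integral) of the density map."""
    return sum(sum(row) for row in density)


heads = [(5, 5), (10, 20), (15, 8)]  # three hypothetical head annotations
density = gaussian_density_map(heads, height=20, width=30)
count = estimate_count(density)
print(round(count))
```

Because each Gaussian is normalized on the grid, the sum of the map equals the number of annotated heads up to floating-point error. A trained model only approximates such a map, which is why its summed output is a fractional estimate.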

|

|

||

|  | ||

|

|

||

| ### SDK functions: | ||

| - Calculate the number of people | ||

| - Calculate the density map | ||

|

|

||

| ### Running example - CrowdDetectExample | ||

| - Test image | ||

|  | ||

|

|

||

| - Example code: | ||

| ```text | ||

| Path imageFile = Paths.get("src/test/resources/crowd1.jpg"); | ||

| Image image = ImageFactory.getInstance().fromFile(imageFile); | ||

| Criteria<Image, NDList> criteria = new CrowdDetect().criteria(); | ||

| // try-with-resources closes the model and the predictor when done | ||

| try (ZooModel<Image, NDList> model = ModelZoo.loadModel(criteria); | ||

|      Predictor<Image, NDList> predictor = model.newPredictor()) { | ||

|     NDList list = predictor.predict(image); | ||

|     // Person quantity: index 1 of the output holds the estimated count | ||

|     float q = list.get(1).toFloatArray()[0]; | ||

|     int quantity = (int) (Math.abs(q) + 0.5); | ||

|     logger.info("Person quantity: {}", quantity); | ||

|     // Density map: index 0 of the output | ||

|     NDArray densityArray = list.get(0); | ||

|     logger.info("Density: {}", densityArray.toDebugString(1000000000, 1000, 1000, 1000)); | ||

| } | ||

| ``` | ||
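The expression `(int)(Math.abs(q) + 0.5)` in the example drops the sign of the raw model output and then rounds half up. The short Python sketch below mirrors that step; `to_person_count` is a hypothetical helper name, and the input values are made up for illustration.

```python
def to_person_count(raw):
    """Mirror of `(int)(Math.abs(q) + 0.5)`: drop the sign, then round half up."""
    return int(abs(raw) + 0.5)


print(to_person_count(10.62))
print(to_person_count(-0.3))
print(to_person_count(2.5), round(2.5))
```

Note that Python's built-in `round` rounds halves to the nearest even number, so it is not a drop-in replacement for this expression.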

|

|

||

|

|

||

| - After a successful run, the console should show the following: | ||

| ```text | ||

| [INFO ] - Person quantity: 11 | ||

| [INFO ] - Density: ND: (1, 1, 80, 60) cpu() float32 | ||

| [ | ||

| [ 4.56512964e-04, 2.19504116e-04, 3.44428350e-04, ..., -1.44560239e-04, 1.58709008e-04], | ||

| [ 9.59073077e-05, 2.53924576e-04, 2.51444580e-04, ..., -1.64886122e-04, 1.14555296e-04], | ||

| [ 6.42040512e-04, 5.44962648e-04, 4.95903892e-04, ..., -1.15299714e-04, 3.01052118e-04], | ||

| [ 1.58930803e-03, 1.43694575e-03, 7.95312808e-04, ..., 1.44582940e-04, 4.20258410e-04], | ||

| .... | ||

| [ 2.21548311e-04, 2.92199198e-04, 3.05847381e-04, ..., 6.77200791e-04, 2.88001203e-04], | ||

| [ 5.04880096e-04, 2.36357562e-04, 1.90203893e-04, ..., 8.42695648e-04, 2.92608514e-04], | ||

| [ 1.45231024e-04, 1.56763941e-04, 2.12623156e-04, ..., 4.69507067e-04, 1.36347953e-04], | ||

| [ 5.02332812e-04, 2.98928004e-04, 3.34762561e-04, ..., 4.80025599e-04, 2.72601028e-04], | ||

| ] | ||

| ``` | ||

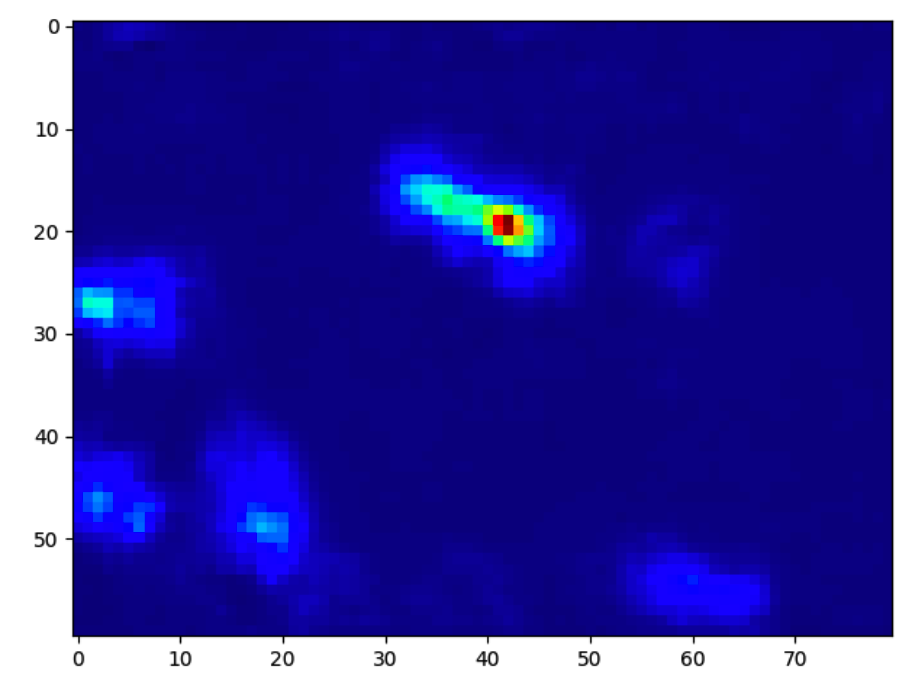

| #### Density map | ||

|  | ||

|

|

||

|

|

||

| ### Open source algorithm | ||

| #### 1. Open source algorithm used by SDK | ||

| - [PaddlePaddle-CrowdNet](https://github.com/yeyupiaoling/PaddlePaddle-CrowdNet) | ||

| #### 2. How to export the model? | ||

| - [how_to_create_paddlepaddle_model](http://docs.djl.ai/docs/paddlepaddle/how_to_create_paddlepaddle_model_zh.html) | ||

| - export_model.py | ||

| ```text | ||

| # Uses the legacy paddle.fluid API, so it needs a Paddle release that still ships paddle.fluid | ||

| import paddle | ||

| import paddle.fluid as fluid | ||

| # Directory that holds the trained inference model to re-export | ||

| INFER_MODEL = 'infer_model/' | ||

| def save_pretrained(dirname='infer/', model_filename=None, params_filename=None, combined=True): | ||

|     # In combined mode the program and the parameters are each saved to a single file | ||

|     if combined: | ||

|         model_filename = "__model__" if not model_filename else model_filename | ||

|         params_filename = "__params__" if not params_filename else params_filename | ||

|     place = fluid.CPUPlace() | ||

|     exe = fluid.Executor(place) | ||

|     # Load the existing inference program together with its input names and output variables | ||

|     program, feeded_var_names, target_vars = fluid.io.load_inference_model(INFER_MODEL, executor=exe) | ||

|     fluid.io.save_inference_model( | ||

|         dirname=dirname, | ||

|         main_program=program, | ||

|         executor=exe, | ||

|         feeded_var_names=feeded_var_names, | ||

|         target_vars=target_vars, | ||

|         model_filename=model_filename, | ||

|         params_filename=params_filename) | ||

| if __name__ == '__main__': | ||

|     paddle.enable_static() | ||

|     save_pretrained() | ||

| ``` |

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,102 @@ | ||

| #### Face Toolbox SDK | ||

|

|

||

| ### Official website: | ||

| [Official website](http://www.aias.top/) | ||

|

|

||

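| #### Face recognition | ||

| In the broad sense, face recognition covers the whole set of technologies used to build a face recognition system, including face image acquisition, face localization, recognition preprocessing, identity verification, and identity search; | ||

| in the narrow sense, it refers specifically to a technology or system that verifies or searches for an identity by means of the face. | ||

| Face recognition is an active area of computer science research. It belongs to biometric recognition, which distinguishes individual organisms (usually people) by their own biological characteristics. | ||

| The characteristics studied in biometrics include the face, fingerprints, palm prints, the iris, the retina, the voice, body shape, and personal habits (for example, the force and rhythm of keystrokes, or a signature). | ||

| The corresponding technologies are face recognition, fingerprint recognition, palm print recognition, iris recognition, retina recognition, speech recognition (speech can be used to identify either the speaker or the spoken content, | ||

| and only the former counts as biometric recognition), body shape recognition, keystroke recognition, and signature recognition. | ||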

|

|

||

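| #### Industry status | ||

| Face recognition is already widely deployed across many industries, for example in face-based access control and pay-by-face systems, and its range of applications keeps growing as the technology improves. China's face recognition | ||

| technology is currently among the world leaders. In the security industry, the major domestic vendors have all released their own face recognition products and solutions, and the broader security sector is the main application area for the technology. | ||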

|

|

||

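| #### Technology trends | ||

| Face recognition today mostly relies on deep learning models built on neural networks. Compared with traditional techniques, deep learning extracts more facial features, | ||

| captures the relationships between faces better, and significantly improves accuracy. In recent years both big data technology and computing power have grown substantially, and deep learning depends heavily on both, | ||

| which is why the technology has made breakthroughs in recent years. Adding more and richer data to training makes models more general and closer to the real world. More computing power, in turn, | ||

| allows models with deeper layer structures. The theory behind deep learning models is also still being refined, and improvements to the models themselves will greatly advance face recognition. | ||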

|

|

||

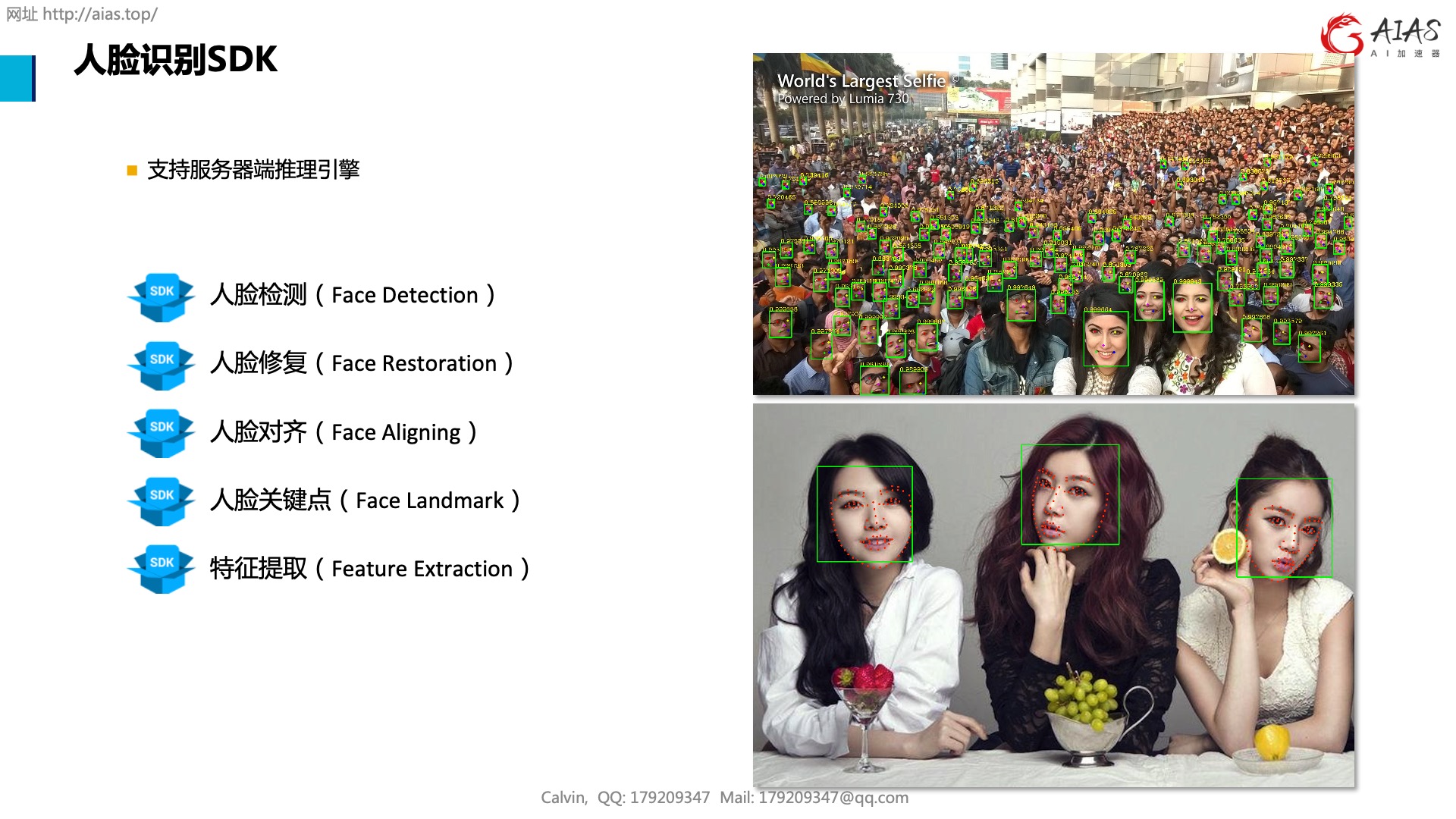

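| #### Key technologies in face recognition | ||

| The key technologies involved are face detection, face keypoints, face feature extraction, face comparison, and face alignment. | ||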

|  | ||

|

|

||

| ### 1. Face detection (with 5 face keypoints) SDK - face_detection_sdk | ||

| Face detection (with 5 face keypoints) is implemented with two models: | ||

| #### Small model: | ||

| Inference example code: LightFaceDetectionExample.java | ||

|

|

||

| #### Large model: | ||

| Inference example code: RetinaFaceDetectionExample.java | ||

|

|

||

| #### Running the face detection example: | ||

| 1. After a successful run, the console should show the following: | ||

| ```text | ||

| [INFO ] - Face detection result image has been saved in: build/output/retinaface_detected.png | ||

| [INFO ] - [ | ||

| class: "Face", probability: 0.99993, bounds: [x=0.552, y=0.762, width=0.071, height=0.156] | ||

| class: "Face", probability: 0.99992, bounds: [x=0.696, y=0.665, width=0.071, height=0.155] | ||

| class: "Face", probability: 0.99976, bounds: [x=0.176, y=0.778, width=0.033, height=0.073] | ||

| class: "Face", probability: 0.99961, bounds: [x=0.934, y=0.686, width=0.032, height=0.068] | ||

| class: "Face", probability: 0.99949, bounds: [x=0.026, y=0.756, width=0.039, height=0.078] | ||

| ] | ||

| ``` | ||

| 2. The output image looks like this: | ||

|  | ||
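The `bounds` values in the log above appear to be fractions of the image width and height rather than pixel values. The sketch below converts one such box to pixel corners under that assumption; `to_pixel_box` and the 1000 x 500 image size are hypothetical and chosen only for illustration.

```python
def to_pixel_box(bounds, image_width, image_height):
    """Convert a normalized (x, y, width, height) box to pixel corners.

    Assumes x and width are fractions of the image width, and
    y and height are fractions of the image height.
    """
    x, y, w, h = bounds
    left = round(x * image_width)
    top = round(y * image_height)
    right = round((x + w) * image_width)
    bottom = round((y + h) * image_height)
    return left, top, right, bottom


# First detection from the log above, on a hypothetical 1000 x 500 image.
box = to_pixel_box((0.552, 0.762, 0.071, 0.156), image_width=1000, image_height=500)
print(box)
```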

|

|

||

| ### 2. Face alignment SDK - face_alignment_sdk | ||

| #### Running the face alignment example - FaceAlignExample.java | ||

| After a successful run, the output image looks like this: | ||

|  | ||
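One common way to align a face from its keypoints is to measure the tilt of the line between the two eyes and rotate the image by the opposite angle. The sketch below shows only that geometry on plain coordinates. It is an assumption about the general technique, not a description of what `FaceAlignExample.java` actually does, and the function names and eye coordinates are hypothetical.

```python
import math


def roll_angle_degrees(left_eye, right_eye):
    """Angle of the eye line relative to the horizontal, in image coordinates."""
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.degrees(math.atan2(dy, dx))


def rotate_point(point, center, angle_degrees):
    """Rotate `point` around `center` by `angle_degrees`."""
    t = math.radians(angle_degrees)
    px, py = point[0] - center[0], point[1] - center[1]
    return (
        center[0] + math.cos(t) * px - math.sin(t) * py,
        center[1] + math.sin(t) * px + math.cos(t) * py,
    )


left_eye, right_eye = (30.0, 40.0), (70.0, 80.0)  # hypothetical keypoints
angle = roll_angle_degrees(left_eye, right_eye)
center = ((left_eye[0] + right_eye[0]) / 2, (left_eye[1] + right_eye[1]) / 2)

# Rotating by the negative angle puts both eyes on the same horizontal line.
aligned_left = rotate_point(left_eye, center, -angle)
aligned_right = rotate_point(right_eye, center, -angle)
print(angle, aligned_left, aligned_right)
```

A full alignment step would apply the same rotation (often combined with scaling and translation) to every pixel of the cropped face, typically through an image library's affine warp.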

|

|

||

| ### 3. Face feature extraction and comparison SDK - face_feature_sdk | ||

| #### 3.1 Face feature extraction - FeatureExtractionExample | ||

| After a successful run, the console should show the following: | ||

| ```text | ||

| [INFO ] - Face feature: [-0.04026184, -0.019486362, -0.09802659, 0.01700999, 0.037829027, ...] | ||

| ``` | ||

|

|

||

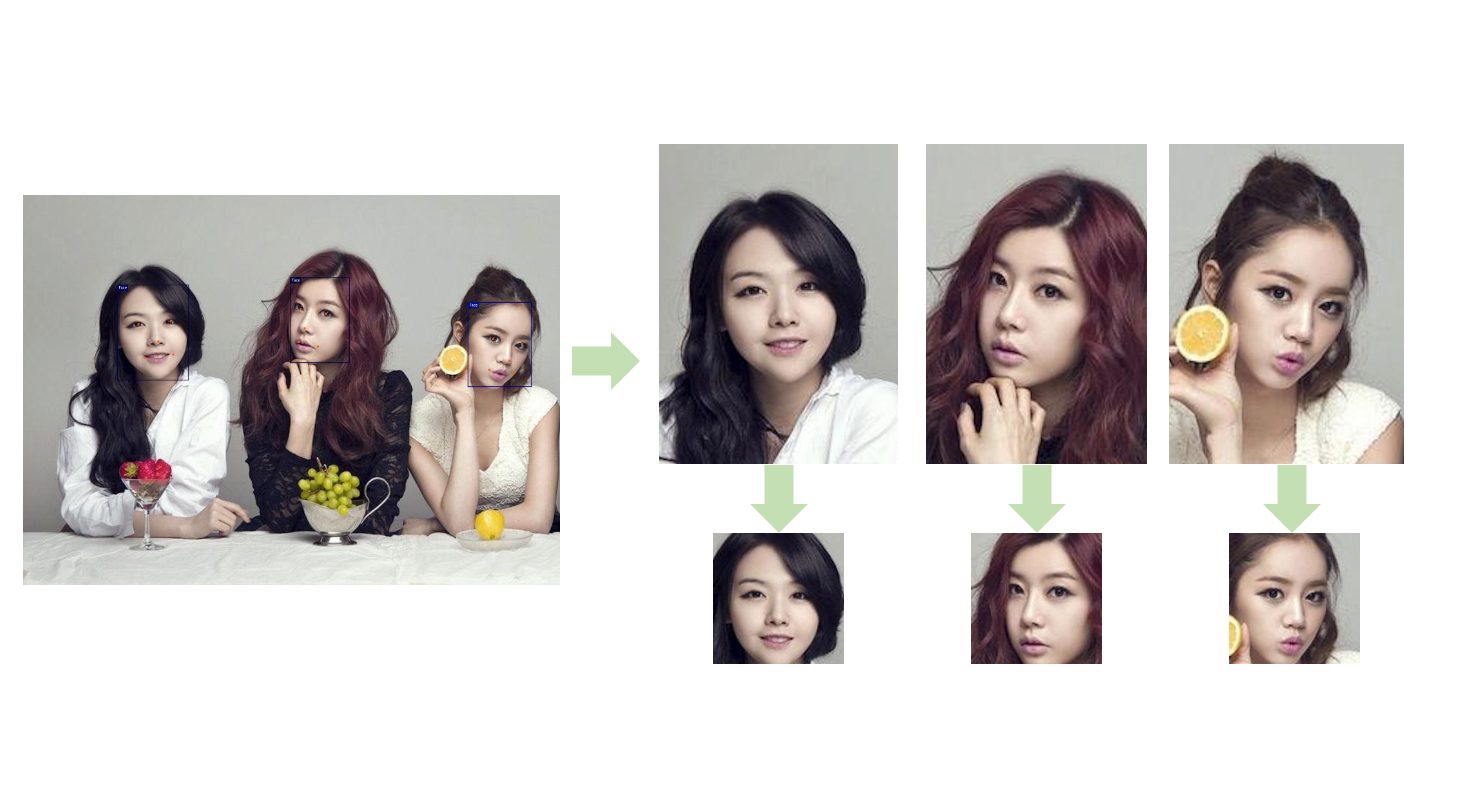

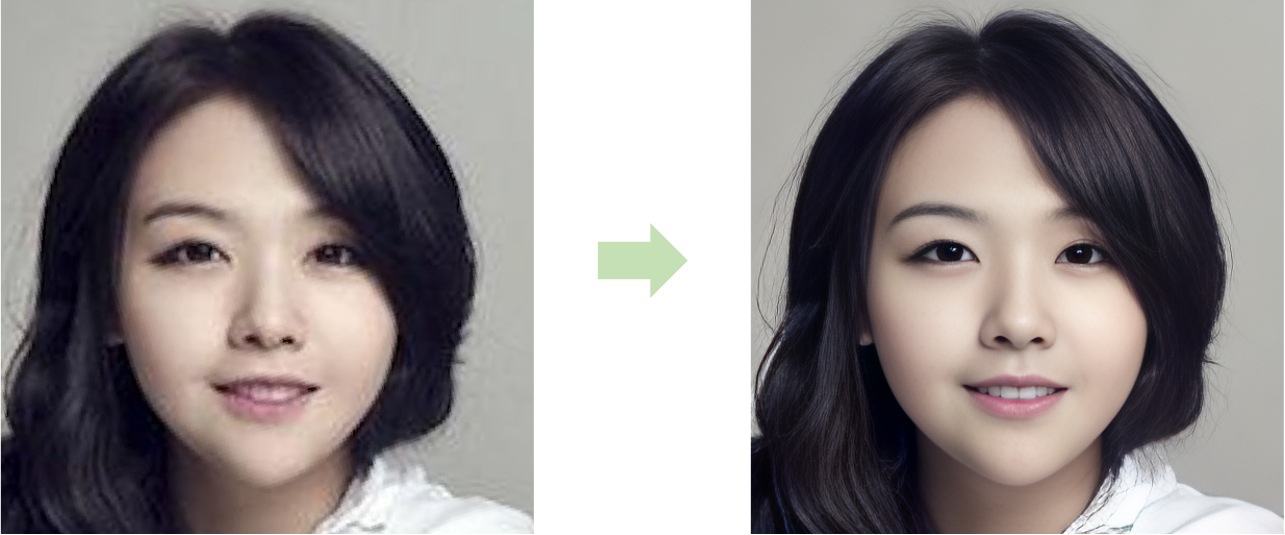

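| #### 3.2 Face feature comparison - FeatureComparisonExample | ||

| The complete face recognition pipeline: face detection (with face keypoints) --> face alignment --> face feature extraction --> face comparison | ||

| - First, detect the faces | ||

| - Then rotate and align each face using its keypoints | ||

| - Extract features and compare their similarity | ||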

|  | ||

|

|

||

| The comparison is computed using Euclidean distance. | ||

| After a successful run, the console should show the following: | ||

|

|

||

| ```text | ||

| [INFO ] - face1 feature: [0.19923544, 0.2091935, -0.17899065, ..., 0.7100589, -0.27192503, 1.1901716] | ||

| [INFO ] - face2 feature: [0.1881579, -0.40177754, -0.19950306, ..., -0.71886086, 0.31257823, -0.009294844] | ||

| [INFO ] - kana1 - kana2 Similarity: 0.68710256 | ||

| ``` | ||
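The text states that the comparison uses Euclidean distance, but it does not say how that distance is turned into the reported similarity score. The sketch below therefore shows only the distance itself, plus a known identity: for L2-normalized vectors, the squared Euclidean distance equals 2 minus twice the cosine similarity. The 4-dimensional vectors are toy values, not real face features, and the function names are hypothetical.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def euclidean_distance(a, b):
    """Straight-line distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy 4-dimensional vectors standing in for real face features.
face1 = l2_normalize([0.20, 0.21, -0.18, 0.71])
face2 = l2_normalize([0.19, -0.40, -0.20, -0.72])

distance = euclidean_distance(face1, face2)
cosine = cosine_similarity(face1, face2)

# For unit vectors: distance^2 == 2 - 2 * cosine.
print(distance, cosine, abs(distance ** 2 - (2 - 2 * cosine)) < 1e-9)
```

With unit-length features, ranking pairs by smaller distance and ranking them by larger cosine similarity give the same order, so either can serve as the basis for a match threshold.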

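| Features are extracted and their similarity is computed. To improve accuracy, the 112 * 112 face image can additionally be cropped proportionally to remove redundant information such as hair. | ||

| If the image is blurry, this can be combined with the face super-resolution model. | ||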

|

|

||

| ### 4. Face resolution enhancement SDK - face_sr_sdk | ||

| #### 4.1 Single face image super-resolution - GFPExample | ||

| - Test image (original on the left, result on the right) | ||

|  | ||

|

|

||

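| #### 4.2 Multi-face super-resolution (detect faces automatically, align them, then enhance resolution) - FaceSrExample | ||

| - Automatically detect faces and keypoints, crop each face, then rotate and align it using the keypoints | ||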

|  | ||

|

|

||

| - Enhance the resolution of all aligned faces | ||

|  | ||

|

|

||

|

|

||

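| ### 5. Image face restoration SDK - face_restoration_sdk | ||

| - Automatically detect faces and keypoints, crop each face, then rotate and align it using the keypoints. | ||

| - Enhance the resolution of all aligned faces. | ||

| - Extract the face with a segmentation model, apply the inverse transform, and paste it back into the original image. | ||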

|

|

||

| #### Running the example - FaceRestorationExample | ||

| - Test image | ||

|  | ||

|

|

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,37 @@ | ||

|

|

||

| ### Download the model and place it in the /models directory | ||

| - Link 1: https://github.com/mymagicpower/AIAS/releases/download/apps/face_detection.zip | ||

| - Link 2: https://github.com/mymagicpower/AIAS/releases/download/apps/face_detection.zip | ||

|

|

||

| ### Mask Detection SDK | ||

| Mask detection supports the fight against pneumonia outbreaks and is one example of artificial intelligence being applied to epidemic prevention and control. Wearing a mask has become one of the most important measures for cutting off transmission routes. In practice, however, some people still do not take it seriously or assume they will not be affected, especially in public places, which puts both themselves and the public at risk. | ||

| The AI-based mask detection function can run in real time on a camera video stream. | ||

|

|

||

| #### SDK function | ||

| - Mask detection | ||

|

|

||

| #### Running example | ||

| 1. After a successful run, the console should show the following: | ||

| ```text | ||

| [INFO ] - Face mask detection result image has been saved in: build/output/faces_detected.png | ||

| [INFO ] - [ | ||

| class: "MASK", probability: 0.99998, bounds: [x=0.608, y=0.603, width=0.148, height=0.265] | ||

| class: "MASK", probability: 0.99998, bounds: [x=0.712, y=0.154, width=0.129, height=0.227] | ||

| class: "NO MASK", probability: 0.99997, bounds: [x=0.092, y=0.123, width=0.066, height=0.120] | ||

| class: "NO MASK", probability: 0.99986, bounds: [x=0.425, y=0.146, width=0.062, height=0.114] | ||

| class: "MASK", probability: 0.99981, bounds: [x=0.251, y=0.671, width=0.088, height=0.193] | ||

| ] | ||

| ``` | ||

| 2. The output image looks like this: | ||

|  | ||
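A typical next step after detection is to summarize the results, for example by counting how many faces are labelled `MASK` and how many `NO MASK`. The sketch below does this for the five detections from the log above. The `summarize` helper and the `min_probability` threshold of 0.5 are assumptions for illustration and are not part of the SDK.

```python
from collections import Counter

# (class, probability) pairs copied from the example log above.
detections = [
    ("MASK", 0.99998),
    ("MASK", 0.99998),
    ("NO MASK", 0.99997),
    ("NO MASK", 0.99986),
    ("MASK", 0.99981),
]


def summarize(detections, min_probability=0.5):
    """Count detections per class, ignoring low-confidence results."""
    kept = [label for label, probability in detections if probability >= min_probability]
    return Counter(kept)


counts = summarize(detections)
print(counts["MASK"], counts["NO MASK"])
```

On a video stream, the same summary could be computed per frame to trigger an alert whenever the `NO MASK` count is above zero.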

|

|

||

|

|

||

| ### Open source algorithms | ||

| #### 1. Open source algorithms used by SDK | ||

| - [PaddleDetection](https://github.com/PaddlePaddle/PaddleDetection) | ||

| - [PaddleClas](https://github.com/PaddlePaddle/PaddleClas/blob/release%2F2.2/README_ch.md) | ||

|

|

||

| #### 2. How to export the model? | ||

| - [export_model](https://github.com/PaddlePaddle/PaddleDetection/blob/release%2F2.4/tools/export_model.py) | ||

| - [how_to_create_paddlepaddle_model](http://docs.djl.ai/docs/paddlepaddle/how_to_create_paddlepaddle_model_zh.html) | ||

|

|

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,107 @@ | ||

| ### Official website: | ||

| [Official website](https://www.aias.top/) | ||

|

|

||

| ### Download the model and place it in the models directory | ||

| - Link: https://pan.baidu.com/s/1xjKfXLMmTs0iWwJ0NpDmKg?pwd=zpwa | ||

|

|

||

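| ### Image feature extraction (512-dimensional) SDK | ||

| Extracts a 512-dimensional feature vector from an image and supports 1:1 image feature comparison, returning a confidence score. | ||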

|

|

||

| ### SDK functions: | ||

| #### 1. Feature extraction | ||

| Uses a ResNet50 model pretrained on ImageNet to extract 512-dimensional image features. | ||

|

|

||

| - Running the example - FeatureExtractionExample | ||

| Test image | ||

|  | ||

|

|

||

| - After a successful run, the console should show the following: | ||

| ```text | ||

| ... | ||

| 512 | ||

| [INFO ] - [..., 0.18182503, 0.13296463, 0.22447465, 0.07165501..., 0.16957843] | ||

| ``` | ||

|

|

||

| #### 2. 1:1 image comparison | ||

| Computes the similarity between two images. | ||

|

|

||

| - Running the example - FeatureComparisonExample | ||

| Test images: the features of the left and right images are compared | ||

|  | ||

|

|

||

| - After a successful run, the console should show the following: | ||

| ```text | ||

| ... | ||

| [INFO ] - 0.77396494 | ||

| ``` | ||

|

|

||

|

|

||

| ### Open source algorithms | ||

| #### 1. Open source algorithms used by the SDK | ||

| - [sentence-transformers](https://github.com/UKPLab/sentence-transformers) | ||

| - [Pretrained models](https://www.sbert.net/docs/pretrained_models.html#image-text-models) | ||

| - [Installation](https://www.sbert.net/docs/installation.html) | ||

|

|

||

|

|

||

| #### 2. How to export the model? | ||

| - [how_to_convert_your_model_to_torchscript](http://docs.djl.ai/docs/pytorch/how_to_convert_your_model_to_torchscript.html) | ||

|

|

||

| - Export the CPU model (PyTorch models are special: CPU and GPU models are not interchangeable, so each must be exported separately) | ||

| ```text | ||

| from sentence_transformers import SentenceTransformer, util | ||

| from PIL import Image | ||

| import torch | ||

| #Load CLIP model | ||

| model = SentenceTransformer('clip-ViT-B-32', device='cpu') | ||

| #Encode an image: | ||

| # img_emb = model.encode(Image.open('two_dogs_in_snow.jpg')) | ||

| #Encode text descriptions | ||

| # text_emb = model.encode(['Two dogs in the snow', 'A cat on a table', 'A picture of London at night']) | ||

| text_emb = model.encode(['Two dogs in the snow']) | ||

| sm = torch.jit.script(model) | ||

| sm.save("models/clip-ViT-B-32/clip-ViT-B-32.pt") | ||

| #Compute cosine similarities | ||

| # cos_scores = util.cos_sim(img_emb, text_emb) | ||

| # print(cos_scores) | ||

| ``` | ||

|

|

||

| - Export the GPU model | ||

| ```text | ||

| from sentence_transformers import SentenceTransformer, util | ||

| from PIL import Image | ||

| import torch | ||

| #Load CLIP model | ||

| model = SentenceTransformer('clip-ViT-B-32', device='cuda') | ||

| #Encode an image: | ||

| # img_emb = model.encode(Image.open('two_dogs_in_snow.jpg')) | ||

| #Encode text descriptions | ||

| # text_emb = model.encode(['Two dogs in the snow', 'A cat on a table', 'A picture of London at night']) | ||

| text_emb = model.encode(['Two dogs in the snow']) | ||

| sm = torch.jit.script(model) | ||

| sm.save("models/clip-ViT-B-32/clip-ViT-B-32.pt") | ||

| #Compute cosine similarities | ||

| # cos_scores = util.cos_sim(img_emb, text_emb) | ||

| # print(cos_scores) | ||

| ``` | ||

|

|

||

|

|

||

| #### Help documentation: | ||

| - https://aias.top/guides.html | ||

| - 1. Performance optimization FAQ: | ||

| - https://aias.top/AIAS/guides/performance.html | ||

| - 2. Engine configuration (including automatic online loading for CPU and GPU, and local configuration): | ||

| - https://aias.top/AIAS/guides/engine_config.html | ||

| - 3. Model loading (automatic online loading, and local configuration): | ||

| - https://aias.top/AIAS/guides/load_model.html | ||

| - 4. Windows FAQ: | ||

| - https://aias.top/AIAS/guides/windows.html |
