These are a few points from an email I sent to members of the Data Science Sydney Meetup. I suppose other Kaggle beginners may find them useful.

My first steps when working on a new competition are:

- Read all the instructions carefully to understand the problem. One important thing to look at is what measure is being optimised. For example, minimising the mean absolute error (MAE) may require a different approach from minimising the mean square error (MSE).

- Read messages on the forum. Especially when joining a competition late, you can learn a lot from the problems other people had. And sometimes there’s even code to get you started (though code quality may vary and it’s not worth relying on).

- Download the data and look at it a bit to understand it better, noting any insights you may have and things you would like to try. Even if you don’t know how to model something, knowing what you want to model is half of the solution. For example, in the DSG Hackathon (predicting air quality), we noticed that even though we had to produce hourly predictions for pollutant levels, the measured levels don’t change every hour (probably due to limitations in the measuring equipment). This led us to try a simple “model” for the first few hours, where we predicted exactly the last measured value, which proved to be one of our most valuable insights. Stupid and uninspiring, but we did finish 6th :-). The main message is: look at the data!

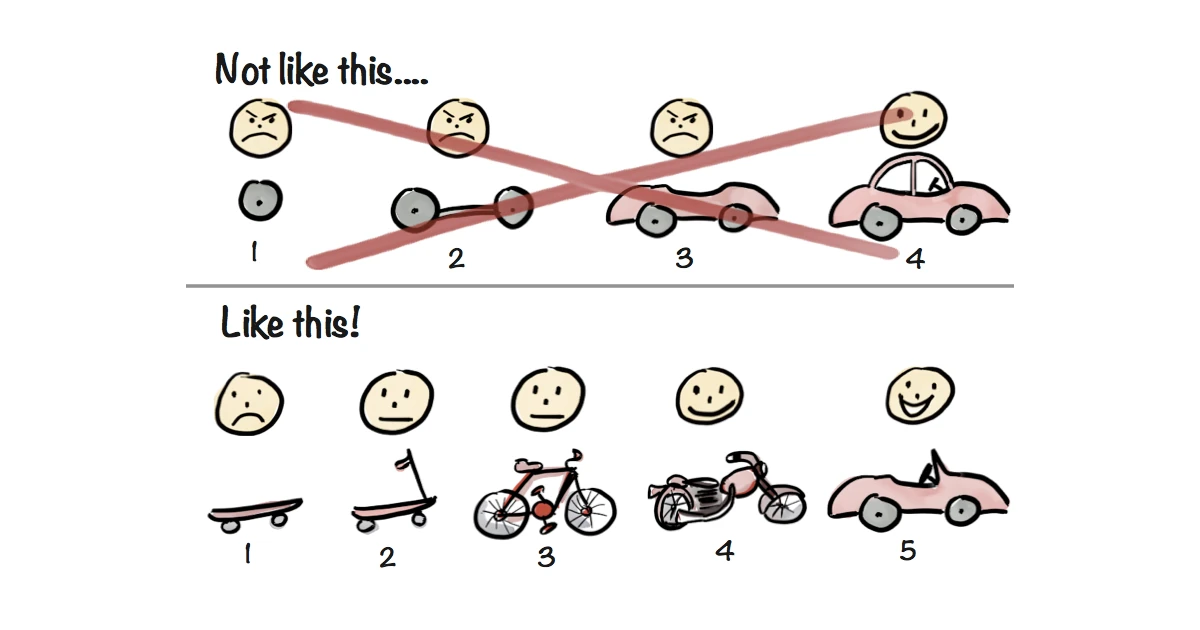

- Set up a local validation environment. This will allow you to iterate quickly without making submissions, which tends to lead to a more accurate model. For those with some programming experience: local validation is your private development environment, the public leaderboard is staging, and the private leaderboard is production.

What you use for local validation depends on the type of problem. For example, for classic prediction problems you may use one of the classic cross-validation techniques. For forecasting problems, you should try to have a local setup that is as close as possible to the setup of the leaderboard. In the Yandex competition, the leaderboard is based on data from the last three days of search activity. You should use a similar split for the training data (and of course, use exactly the same local setup for all the team members so you can compare results).

- Get the submission format right. Make sure that you can reproduce the baseline results locally.
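To make the forecasting case above concrete, here is a minimal sketch of a time-based split that holds out the last three days for validation, mirroring a leaderboard built on the final days of activity. The function name `time_based_split`, the `(day, value)` row layout, and the toy data are all made up for illustration; a real competition dataset would need its own loading and timestamp handling.

```python
from datetime import date, timedelta


def time_based_split(rows, holdout_days=3):
    """Split (day, value) rows so the last `holdout_days` days form the validation set."""
    last_day = max(day for day, _ in rows)
    cutoff = last_day - timedelta(days=holdout_days)
    # Everything up to and including the cutoff is training data;
    # everything after it is held out, so validation never precedes training.
    train = [row for row in rows if row[0] <= cutoff]
    valid = [row for row in rows if row[0] > cutoff]
    return train, valid


# Hypothetical toy data: one record per day for ten consecutive days.
rows = [(date(2014, 1, 1) + timedelta(days=i), i) for i in range(10)]
train, valid = time_based_split(rows)
print(len(train), len(valid))
```

Sharing one such function across the team is a simple way to make sure everyone's local scores are computed on the same split and are therefore comparable.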

Now, the way things often work is:

- You try many different approaches and ideas. Most of them lead to nothing. Hopefully some lead to something.

- Create ensembles of the various approaches.

- Repeat until you run out of time.

- Win. Hopefully.
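The ensembling step in the list above can be as simple as averaging the predictions of several models. The sketch below uses made-up targets and predictions, chosen so that the two models' errors partly cancel out; `average_ensemble` is a hypothetical helper, and averaging is only one of many ways to combine models. It will not always help, which is why the blend should be checked on your local validation set.

```python
def mse(predictions, targets):
    """Mean squared error between two equal-length sequences."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


def average_ensemble(*prediction_lists):
    """Average the predictions of several models, one position at a time."""
    return [sum(preds) / len(preds) for preds in zip(*prediction_lists)]


# Made-up validation targets and predictions from two hypothetical models.
targets = [10.0, 20.0, 30.0, 40.0]
model_a = [12.0, 18.0, 33.0, 37.0]
model_b = [9.0, 23.0, 28.0, 42.0]

blend = average_ensemble(model_a, model_b)
print(mse(model_a, targets), mse(model_b, targets), mse(blend, targets))
```

In this toy case the blend has a lower error than either model alone because their mistakes point in opposite directions; two models that make the same mistakes would gain nothing from averaging.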

Note that in many competitions, the differences between the top results are not statistically significant, so winning may depend on luck. But getting one of the top results also depends to a large degree on your persistence. To avoid disappointment, I think the main goal should be to learn things, so spend time trying to understand how the methods that you’re using work. Libraries like sklearn make it really easy to try a bunch of models without understanding how they work, but you’re better off trying fewer things and developing the ability to reason about why they do or don’t work.

An analogy for programmers: while you can use an array, a linked list, a binary tree, and a hash table interchangeably in some situations, understanding when to use each one can make a world of difference in terms of performance. It’s pretty similar for predictive models (though they are often not as well-behaved as data structures).

Finally, it’s worth watching this video by Phil Brierley, who won a bunch of Kaggle competitions. It’s really good, and doesn’t require much understanding of R.

Any comments are welcome!

Public comments are closed, but I love hearing from readers. Feel free to contact me with your thoughts.

Flavio

2014-12-29 13:09:27

Hi Yanir!

I have a question.

When you say: “For example, minimising the mean absolute error (MAE) may require a different approach from minimising the mean square error (MSE).” can you explain what kind of approach (or methods, or rules of thumb) you use to minimise MAE or MSE in machine learning?

Thanks for your time in advance!

Regards,

Flavio

Yanir

2014-12-29 21:50:13

Hi Flavio!

The optimisation approach depends on the data and method you’re using.

A basic example is when you don’t have any features, only a sample of target values. In that case, if you want to minimise the MAE you should choose the sample median, and if you want to minimise the MSE you should choose the sample mean. Here’s a proof: https://www.dropbox.com/s/b1195thcqebnxyn/mae-vs-rmse.pdf
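As a quick numerical check of the median-versus-mean point (not a substitute for the proof), the sketch below compares the two constant predictions on a small made-up sample with one outlier. The helper names `mae` and `mse` and the sample values are illustrative only.

```python
from statistics import mean, median


def mae(targets, constant):
    """Mean absolute error when predicting the same constant for every target."""
    return sum(abs(t - constant) for t in targets) / len(targets)


def mse(targets, constant):
    """Mean squared error when predicting the same constant for every target."""
    return sum((t - constant) ** 2 for t in targets) / len(targets)


# Made-up sample with a single large outlier.
targets = [1, 2, 3, 4, 100]
sample_mean, sample_median = mean(targets), median(targets)

print("MAE at median:", mae(targets, sample_median), "| at mean:", mae(targets, sample_mean))
print("MSE at median:", mse(targets, sample_median), "| at mean:", mse(targets, sample_mean))
```

On this sample the median gives the lower MAE and the mean gives the lower MSE, and the outlier pulls the two constants far apart, which is why the choice of measure can change what you should predict.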

For more complex problems, if you’re using a machine learning package you can often specify the type of loss function to minimise (see https://en.wikipedia.org/wiki/Loss_function#Selecting_a_loss_function). But even if your measure isn’t directly optimised (e.g., MAE is harder to minimise than MSE because it’s not differentiable at zero), you can always do cross-validation to find the parameters that optimise it.

I hope this helps.

Hamid Khan

2018-10-25 21:18:00

Hi Yanir!

Appreciate your work! I need to know: should I jump directly into machine learning algorithms, programming, etc., or first master math and statistics? I am new to this field.

Yanir Seroussi

2018-10-26 02:27:48