
Description

System information

- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): Linux 20.04

- TensorFlow version and how it was installed (source or binary): 2.8, binary (pip install)

- TensorFlow-Addons version and how it was installed (source or binary): 1.6.1, binary (pip install)

- Python version: 3.8.10

- Is GPU used? (yes/no): yes

Describe the bug

I ran Google's ViT (Vision Transformer) implementation and tried training it with both LAMB and Adam. With LAMB I get very strange results.

This looks like a bug.

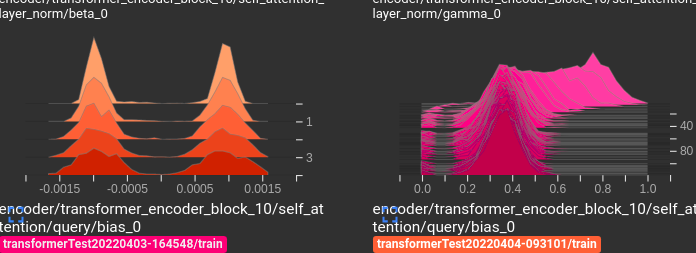

LAMB is in orange, Adam is in purple.

As you can see, during the first epoch LAMB splits the distributions into two hills (they become bimodal), while Adam does nothing of the kind.

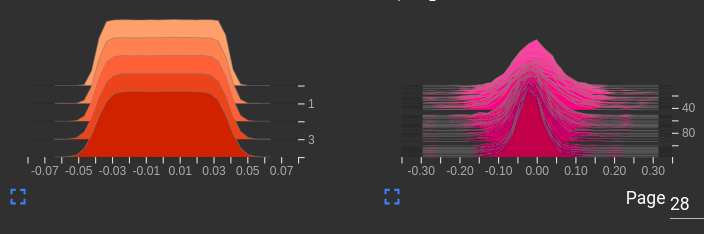

Another shape that appears a lot is this:

It looks as if the algorithm re-initializes the weights with some kind of Xavier initialization and then keeps them that way for some reason.
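One rough way to check the Xavier guess on a dumped kernel is to compare it against what a fresh Glorot (Xavier) uniform draw would look like. This is only a sketch, not something I have run against the ViT weights: `looks_like_glorot_uniform` and `rel_tol` are hypothetical names, and it assumes a Dense-style 2-D kernel of shape `(fan_in, fan_out)` that would have been initialized with the uniform variant, which may not be what the ViT layers actually use.

```python
import numpy as np


def glorot_uniform_limit(fan_in, fan_out):
    """Bound of the Glorot (Xavier) uniform initializer: sqrt(6 / (fan_in + fan_out))."""
    return np.sqrt(6.0 / (fan_in + fan_out))


def looks_like_glorot_uniform(kernel, rel_tol=0.1):
    """Heuristic check (hypothetical helper): does a 2-D kernel resemble a
    fresh Glorot-uniform draw?

    A uniform distribution on [-limit, limit] has standard deviation
    limit / sqrt(3), and no sample lies outside the bounds.
    """
    kernel = np.asarray(kernel)
    fan_in, fan_out = kernel.shape  # assumes a Dense-style (fan_in, fan_out) kernel
    limit = glorot_uniform_limit(fan_in, fan_out)
    expected_std = limit / np.sqrt(3.0)
    within_bounds = np.abs(kernel).max() <= limit
    std_matches = abs(kernel.std() - expected_std) <= rel_tol * expected_std
    return bool(within_bounds and std_matches)


# Synthetic data only, to show how the check behaves.
rng = np.random.default_rng(0)
limit = glorot_uniform_limit(256, 128)  # sqrt(6 / 384) = 0.125
fresh = rng.uniform(-limit, limit, size=(256, 128))   # looks freshly initialized
trained_like = rng.normal(0.0, 0.2, size=(256, 128))  # much wider spread

print(looks_like_glorot_uniform(fresh))         # True
print(looks_like_glorot_uniform(trained_like))  # False
```

If the kernels saved after the first LAMB epoch pass this check while the Adam ones do not, that would support the re-initialization guess; if neither passes, the shape probably has another cause.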

Code to reproduce the issue

Train the ViT model from tensorflow/models with the LAMB optimizer (and with Adam for comparison).

Other info / logs

TensorBoard logs are attached:

tensorboard.zip