Releases: microsoft/autogen

python-v0.4.9.3

Patch Release

This release fixes a bug in the MCP Server Tool that caused an error when unset tool arguments were set to None and passed on to the server. It also improves the error messages returned from the server and adds a default timeout. #6080, #6125
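The two fixes above can be illustrated with a small standard-library sketch. It drops optional arguments that were never set (None) before forwarding a call, and it bounds the wait with a timeout. This is not the MCP tool's actual code. `drop_unset_arguments`, `call_tool_with_timeout`, `fake_server`, and the 5-second default are hypothetical names and values chosen for illustration.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict

# Hypothetical default; the real default timeout of the MCP tool is not stated here.
DEFAULT_TIMEOUT_SECONDS = 5.0


def drop_unset_arguments(arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Remove optional arguments that were never set (represented as None)."""
    return {name: value for name, value in arguments.items() if value is not None}


async def call_tool_with_timeout(
    send: Callable[[Dict[str, Any]], Awaitable[Any]],
    arguments: Dict[str, Any],
    timeout: float = DEFAULT_TIMEOUT_SECONDS,
) -> Any:
    """Forward only the arguments that were set, and bound the wait time."""
    cleaned = drop_unset_arguments(arguments)
    try:
        return await asyncio.wait_for(send(cleaned), timeout=timeout)
    except asyncio.TimeoutError as exc:
        # Surface a descriptive error instead of a bare timeout.
        raise RuntimeError(f"Tool call timed out after {timeout} seconds") from exc


async def fake_server(arguments: Dict[str, Any]) -> Dict[str, Any]:
    # Hypothetical stand-in for a server that rejects explicit nulls.
    if any(value is None for value in arguments.values()):
        raise ValueError("null argument not allowed")
    return {"received": arguments}


if __name__ == "__main__":
    result = asyncio.run(call_tool_with_timeout(fake_server, {"url": "https://example.com", "max_length": None}))
    print(result)  # {'received': {'url': 'https://example.com'}}
```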

Full Changelog: python-v0.4.9.2...python-v0.4.9.3

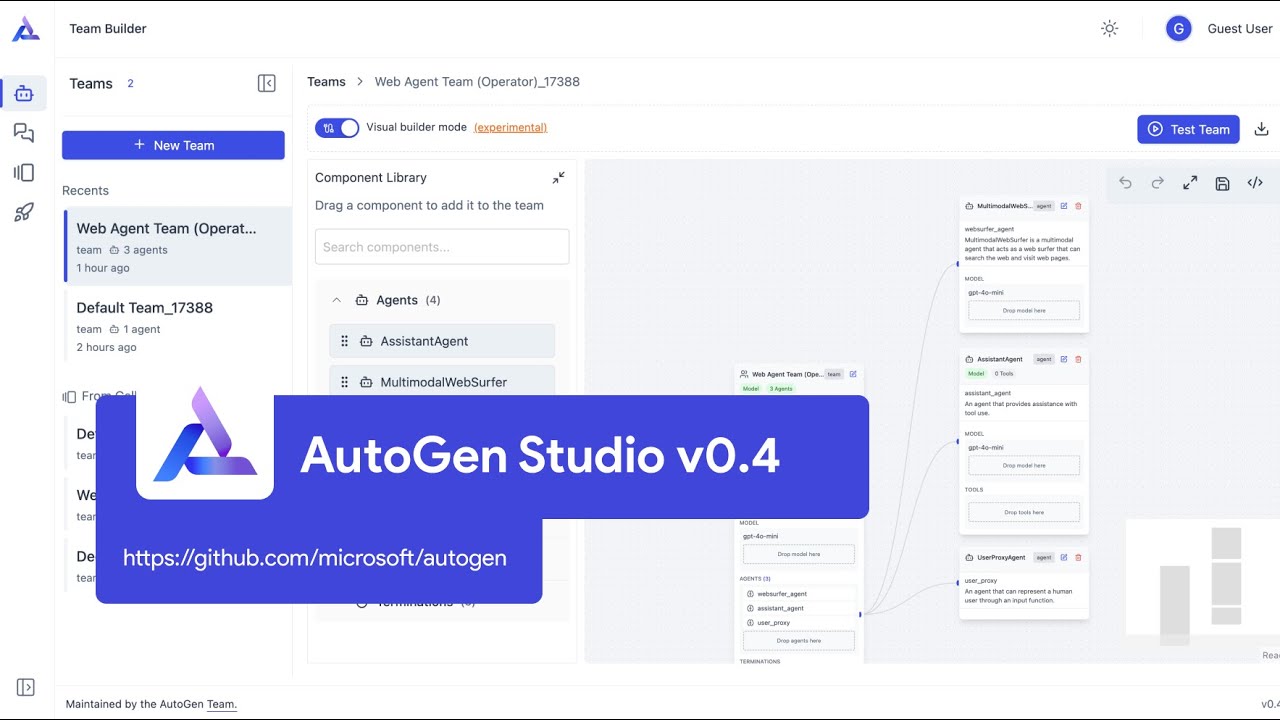

autogenstudio-v0.4.2

What's New

This release makes improvements to AutoGen Studio across multiple areas.

Component Validation and Testing

In the team builder, all component schemas are automatically validated on save. This way, configuration errors (e.g., incorrect provider names) are caught early.

In addition, there is a test button for model clients where you can verify the correctness of your model configuration. The LLM is given a simple query and the results are shown.

Gallery Improvements

You can now modify teams, agents, models, tools, and termination conditions independently in the UI, and only review JSON when needed. The same UI panel used to update components in the team builder is reused in the Gallery. The Gallery in AGS is now persisted in a database rather than in local storage. Anthropic models are now supported in AGS.

Observability - LLMCallEvents

- Enable LLM Call Observability in AGS #5457

You can now view all LLMCallEvents in AGS. Go to settings (cog icon on lower left) to enable this feature.

Token Streaming

- Add Token Streaming in AGS in #5659

For a better developer experience, the AGS UI streams tokens as an LLM generates them, for any agent where model_client_stream is set to true.
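The consumer side of token streaming can be sketched with the standard library. Chunks from an async generator are displayed as soon as they arrive, and the full message is assembled at the end. `fake_model_stream` is a hypothetical stand-in for a streaming model client. This sketch does not use AGS or AgentChat.

```python
import asyncio
from typing import AsyncIterator, List


async def fake_model_stream(text: str) -> AsyncIterator[str]:
    """Hypothetical stand-in for a model client that yields tokens as they are generated."""
    for token in text.split(" "):
        await asyncio.sleep(0)  # simulate waiting on the network between chunks
        yield token + " "


async def render_stream(stream: AsyncIterator[str]) -> str:
    """Display each chunk as soon as it arrives, then return the complete message."""
    chunks: List[str] = []
    async for chunk in stream:
        print(chunk, end="", flush=True)  # incremental display, as a streaming UI would do
        chunks.append(chunk)
    print()
    return "".join(chunks).strip()


if __name__ == "__main__":
    final_message = asyncio.run(render_stream(fake_model_stream("Paris is the capital of France.")))
```

Keeping the assembled message separate from the incremental display lets the UI show partial output early and still record a single final message.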

UX Improvements - Session Comparison

- AGS - Test Model Component in UI, Compare Sessions in #5963

It is often valuable, even critical, to have a side-by-side comparison of multiple agent configurations (e.g., using a team of web agents that solve tasks using a browser or agents with web search API tools). You can now do this using the compare button in the playground, which lets you select multiple sessions and interact with them to compare outputs.

Experimental Features

There are a few interesting but early features that ship with this release:

- Authentication in AGS: You can pass in an authentication configuration YAML file to enable user authentication for AGS. Currently, only GitHub authentication is supported. This lays the foundation for a multi-user environment (#5928) where various users can log in and only view their own sessions. More work is needed to clarify the isolation of resources (e.g., environment variables) and other security considerations.

See the documentation for more details.


- Local Python Code Execution Tool: AGS now has early support for a local Python code execution tool. More work is needed to test the underlying AgentChat implementation.

Other Fixes

- Fixed issue with using AzureSQL DB as the database engine for AGS

- Fixed cascading delete issue in AGS (ensure runs are deleted when sessions are deleted) #5804 by @victordibia

- Fixed termination UI bug #5888

- Fixed Dockerfile for AGS by @gunt3001 #5932

Thanks to @ekzhu, @jackgerrits, @gagb, @usag1e, @dominiclachance, @EItanya and many others for testing and feedback.

python-v0.4.9.2

Patch Fixes

- Fix logging error in SKChatCompletionAdapter #5893

- Fix missing system message in the model client call during the reflect step when reflect_on_tool_use=True #5926 (bug introduced in v0.4.8)

- Fix directory listing error in FileSurfer #5938

Security Fixes

- Use the SecretStr type for model clients' API keys. This ensures the secret is not exported when calling model_client.dump_component().model_dump_json(). #5939 and #5947. This affects OpenAIChatCompletionClient, AzureOpenAIChatCompletionClient, and AnthropicChatCompletionClient: their API keys will no longer be exported when you serialize the model clients. We recommend environment-based or token-based authentication rather than passing API keys around as data in configs.
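The idea behind the change can be sketched without any dependencies. A wrapper type holds the secret, serialization writes a mask instead of the value, and the key is resolved from the environment at runtime. `MaskedSecret` and `dump_config` are hypothetical stand-ins inspired by pydantic's SecretStr; they are not the AutoGen or pydantic API. OPENAI_API_KEY is used only as an example variable name.

```python
import json
import os
from typing import Any, Dict


class MaskedSecret:
    """Hypothetical stand-in for a secret string type (similar in spirit to pydantic's SecretStr)."""

    def __init__(self, value: str) -> None:
        self._value = value

    def get_secret_value(self) -> str:
        # Only explicit access reveals the raw value.
        return self._value

    def __repr__(self) -> str:
        return "MaskedSecret('**********')"


def dump_config(config: Dict[str, Any]) -> str:
    """Serialize a config to JSON, writing a mask in place of any secret."""

    def encode(obj: Any) -> Any:
        if isinstance(obj, MaskedSecret):
            return "**********"
        raise TypeError(f"Cannot serialize {type(obj).__name__}")

    return json.dumps(config, default=encode)


# Resolve the key from the environment at runtime instead of storing it in the config.
api_key = MaskedSecret(os.environ.get("OPENAI_API_KEY", "sk-example"))
config = {"model": "gpt-4o", "api_key": api_key}
print(dump_config(config))  # {"model": "gpt-4o", "api_key": "**********"}
```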

Full Changelog: python-v0.4.9...python-v0.4.9.2

python-v0.4.9

What's New

Anthropic Model Client

Native support for Anthropic models. Get your update:

pip install -U "autogen-ext[anthropic]"

The new client follows the same interface as OpenAIChatCompletionClient so you can use it directly in your agents and teams.

```python
import asyncio

from autogen_core.models import UserMessage
from autogen_ext.models.anthropic import AnthropicChatCompletionClient


async def main():
    anthropic_client = AnthropicChatCompletionClient(
        model="claude-3-sonnet-20240229",
        api_key="your-api-key",  # Optional if ANTHROPIC_API_KEY is set in environment
    )
    result = await anthropic_client.create([UserMessage(content="What is the capital of France?", source="user")])  # type: ignore
    print(result)


if __name__ == "__main__":
    asyncio.run(main())
```

You can also load the model client directly from a configuration dictionary:

```python
from autogen_core.models import ChatCompletionClient

config = {
    "provider": "AnthropicChatCompletionClient",
    "config": {"model": "claude-3-sonnet-20240229"},
}

client = ChatCompletionClient.load_component(config)
```

To use it with AssistantAgent and run the agent in a loop that matches the behavior of Claude agents, you can use a Single-Agent Team.

- Add anthropic docs by @victordibia in #5882

LlamaCpp Model Client

LlamaCpp is a great project for working with local models. Now we have native support via its official SDK.

pip install -U "autogen-ext[llama-cpp]"

To use a local model file:

```python
import asyncio

from autogen_core.models import UserMessage
from autogen_ext.models.llama_cpp import LlamaCppChatCompletionClient


async def main():
    llama_client = LlamaCppChatCompletionClient(model_path="/path/to/your/model.gguf")
    result = await llama_client.create([UserMessage(content="What is the capital of France?", source="user")])
    print(result)


asyncio.run(main())
```

To use it with a Hugging Face model:

```python
import asyncio

from autogen_core.models import UserMessage
from autogen_ext.models.llama_cpp import LlamaCppChatCompletionClient


async def main():
    llama_client = LlamaCppChatCompletionClient(
        repo_id="unsloth/phi-4-GGUF", filename="phi-4-Q2_K_L.gguf", n_gpu_layers=-1, seed=1337, n_ctx=5000
    )
    result = await llama_client.create([UserMessage(content="What is the capital of France?", source="user")])
    print(result)


asyncio.run(main())
```

- Feature add Add LlamaCppChatCompletionClient and llama-cpp by @aribornstein in #5326

Task-Centric Memory (Experimental)

Task-Centric memory is an experimental module that can give agents the ability to:

- Accomplish general tasks more effectively by learning quickly and continually beyond context-window limitations.

- Remember guidance, corrections, plans, and demonstrations provided by users (teachability)

- Learn through the agent's own experience and adapt quickly to changing circumstances (self-improvement)

- Avoid repeating mistakes on tasks that are similar to those previously encountered.

For example, you can use Teachability as a memory for AssistantAgent so your agent can learn from user teaching.

```python
import asyncio

from autogen_agentchat.agents import AssistantAgent
from autogen_agentchat.ui import Console
from autogen_ext.models.openai import OpenAIChatCompletionClient
from autogen_ext.experimental.task_centric_memory import MemoryController
from autogen_ext.experimental.task_centric_memory.utils import Teachability


async def main():
    # Create a client
    client = OpenAIChatCompletionClient(model="gpt-4o-2024-08-06")

    # Create an instance of Task-Centric Memory, passing minimal parameters for this simple example
    memory_controller = MemoryController(reset=False, client=client)

    # Wrap the memory controller in a Teachability instance
    teachability = Teachability(memory_controller=memory_controller)

    # Create an AssistantAgent, and attach teachability as its memory
    assistant_agent = AssistantAgent(
        name="teachable_agent",
        system_message="You are a helpful AI assistant, with the special ability to remember user teachings from prior conversations.",
        model_client=client,
        memory=[teachability],
    )

    # Enter a loop to chat with the teachable agent
    print("Now chatting with a teachable agent. Please enter your first message. Type 'exit' or 'quit' to quit.")
    while True:
        user_input = input("\nYou: ")
        if user_input.lower() in ["exit", "quit"]:
            break
        await Console(assistant_agent.run_stream(task=user_input))


if __name__ == "__main__":
    asyncio.run(main())
```

Head over to its README for details, and the samples for runnable examples.

- Task-Centric Memory by @rickyloynd-microsoft in #5227
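The store-then-recall idea behind teachability can be shown with a deliberately naive sketch. User teachings are stored as text and recalled when they share words with a new task. The real Task-Centric Memory module is considerably more sophisticated, and this is not its algorithm. `ToyTeachableMemory`, `content_words`, and the stopword list are hypothetical.

```python
import re
from dataclasses import dataclass, field
from typing import List, Set

# Hypothetical stopword list so that filler words do not count as overlap.
STOPWORDS = {"a", "an", "the", "to", "in", "of", "for", "my", "and", "when"}


def content_words(text: str) -> Set[str]:
    """Lowercase word set with stopwords removed."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


@dataclass
class ToyTeachableMemory:
    """Hypothetical, greatly simplified memory: store user teachings, recall them by word overlap."""

    insights: List[str] = field(default_factory=list)

    def add_insight(self, insight: str) -> None:
        self.insights.append(insight)

    def retrieve(self, task: str, min_overlap: int = 1) -> List[str]:
        task_words = content_words(task)
        scored = [(len(task_words & content_words(insight)), insight) for insight in self.insights]
        # Most relevant first; drop insights that share too few words with the task.
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [insight for score, insight in scored if score >= min_overlap]


memory = ToyTeachableMemory()
memory.add_insight("When writing Python, always add type hints.")
memory.add_insight("Prefer metric units in travel answers.")
print(memory.retrieve("Write a Python function to parse dates"))
```

The recalled insights would then be added to the agent's context before it answers, which is how guidance from earlier conversations can carry over to later ones.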

New Sample: Gitty (Experimental)

Gitty is an experimental application built to help ease the burden on open-source project maintainers. Currently, it can generate automatic replies to issues.

To use:

gitty --repo microsoft/autogen issue 5212

Head over to Gitty to see details.

Improved Tracing and Logging

In this version, we made a number of improvements on tracing and logging.

- add LLMStreamStartEvent and LLMStreamEndEvent by @EItanya in #5890

- Allow for tracing via context provider by @EItanya in #5889

- Fix span structure for tracing by @ekzhu in #5853

- Add ToolCallEvent and log it from all builtin tools by @ekzhu in #5859
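Structured events like those listed above are delivered through Python's standard logging module. The sketch below attaches a collecting handler to an event logger so the records can be inspected afterwards. Two points are assumptions to verify against the autogen_core documentation: that autogen_core exposes EVENT_LOGGER_NAME, and that its value is "autogen_core.events". The dict payloads only stand in for the real event objects.

```python
import logging
from typing import List

# Assumption: matches autogen_core's EVENT_LOGGER_NAME constant.
EVENT_LOGGER_NAME = "autogen_core.events"


class CollectingHandler(logging.Handler):
    """Keep every event record in memory so it can be inspected or exported later."""

    def __init__(self) -> None:
        super().__init__(level=logging.INFO)
        self.records: List[logging.LogRecord] = []

    def emit(self, record: logging.LogRecord) -> None:
        self.records.append(record)


event_logger = logging.getLogger(EVENT_LOGGER_NAME)
event_logger.setLevel(logging.INFO)
handler = CollectingHandler()
event_logger.addHandler(handler)

# Stand-ins for events the library would log during a streamed call that uses a tool.
event_logger.info({"type": "LLMStreamStartEvent"})
event_logger.info({"type": "ToolCallEvent", "tool": "fetch"})
event_logger.info({"type": "LLMStreamEndEvent"})

print([record.msg["type"] for record in handler.records])
```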

Powershell Support for LocalCommandLineCodeExecutor

- feat: update local code executor to support powershell by @lspinheiro in #5884

Website Accessibility Improvements

@peterychang has made huge improvements to the accessibility of our documentation website. Thank you @peterychang!

- word wrap prev/next links on autodocs by @peterychang in #5867

- Allow Voice Access to find clickable cards by @peterychang in #5857

- copy tooltip on focus. Upgrade PDT version by @peterychang in #5848

- highlight focused code output boxes in jupyter notebook pages by @peterychang in #5819

- Fix high contrast mode focus by @peterychang in #5796

- Keyboard copy event and search bar cancellation by @peterychang in #5820

Bug Fixes

- fix: save_state should not require the team to be stopped. by @ekzhu in #5885

- fix: remove max_tokens from az ai client create call when stream=True by @ekzhu in #5860

- fix: add plugin to kernel by @lspinheiro in #5830

- fix: warn when using reflection on tool use with Claude models by @ekzhu in #5829

Other Python Related Changes

- doc: update termination tutorial to include FunctionCallTermination condition and fix formatting by @ekzhu in #5813

- docs: Add note recommending PythonCodeExecutionTool as an alternative to CodeExecutorAgent by @ekzhu in #5809

- Update quickstart.ipynb by @taswar in #5815

- Fix warning in selector group chat guide by @ekzhu in #5849

- Support for external agent runtime in AgentChat by @ekzhu in #5843

- update ollama usage docs by @ekzhu in #5854

- Update markitdown requirements to >= 0.0.1, while still in the 0.0.x range by @afourney in #5864

- Add client close by @afourney in #5871

- Update README to clarify Web Browsing Agent Team usage, and use animated Chromium browser by @ekzhu in #5861

- Add author name before their message in Chainlit team sample by @DavidYu00 in #5878

- Bump axios from 1.7.9 to 1.8.2 in /python/packages/autogen-studio/frontend by @dependabot in #5874

- Add an optional base path to FileSurfer by @husseinmozannar in #5886

- feat: Pause and Resume for AgentChat Teams and Agents by @ekzhu in #5887

- update version to v0.4.9 by @ekzhu in #5903

New Contributors

- @taswar made their first contribution in #5815

- @DavidYu00 made their first contribution in #5878

- @aribornstein made their first contribution in #5326

**Full Chang...

python-v0.4.8.2

Patch Fixes

- fix: Remove max_tokens=20 from AzureAIChatCompletionClient.create_stream's create call when stream=True #5860

- fix: Add a close() method to built-in model clients to ensure the async event loop is closed when the program exits. This should fix the "ResourceWarning: unclosed transport when importing web_surfer" errors. #5871
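The usual way to use the new close() method is to release the client in a finally block, so cleanup runs even if the request fails. With a real client this is `await model_client.close()`. `ToyModelClient` below is a hypothetical stand-in so that the pattern runs without network access.

```python
import asyncio


class ToyModelClient:
    """Hypothetical client that owns a resource, such as an HTTP connection pool."""

    def __init__(self) -> None:
        self.closed = False

    async def create(self, prompt: str) -> str:
        if self.closed:
            raise RuntimeError("client is closed")
        await asyncio.sleep(0)  # stand-in for a network round trip
        return f"echo: {prompt}"

    async def close(self) -> None:
        # A real client would release its transport here.
        self.closed = True


async def main() -> str:
    client = ToyModelClient()
    try:
        return await client.create("What is the capital of France?")
    finally:
        # Release resources before the event loop shuts down, even on error.
        await client.close()


if __name__ == "__main__":
    print(asyncio.run(main()))  # echo: What is the capital of France?
```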

Full Changelog: python-v0.4.8.1...python-v0.4.8.2

python-v0.4.8.1

Patch fixes to v0.4.8:

- Fixing SKChatCompletionAdapter bug that disabled tool use #5830

Full Changelog: python-v0.4.8...python-v0.4.8.1

python-v0.4.8

What's New

Ollama Chat Completion Client

To use the new Ollama Client:

pip install -U "autogen-ext[ollama]"

```python
import asyncio

from autogen_core.models import UserMessage
from autogen_ext.models.ollama import OllamaChatCompletionClient


async def main():
    ollama_client = OllamaChatCompletionClient(
        model="llama3",
    )
    result = await ollama_client.create([UserMessage(content="What is the capital of France?", source="user")])  # type: ignore
    print(result)


asyncio.run(main())
```

To load a client from configuration:

```python
from autogen_core.models import ChatCompletionClient

config = {
    "provider": "OllamaChatCompletionClient",
    "config": {"model": "llama3"},
}

client = ChatCompletionClient.load_component(config)
```

It also supports structured output:

```python
import asyncio

from autogen_core.models import UserMessage
from autogen_ext.models.ollama import OllamaChatCompletionClient
from pydantic import BaseModel


class StructuredOutput(BaseModel):
    first_name: str
    last_name: str


async def main():
    ollama_client = OllamaChatCompletionClient(
        model="llama3",
        response_format=StructuredOutput,
    )
    result = await ollama_client.create([UserMessage(content="Who was the first man on the moon?", source="user")])  # type: ignore
    print(result)


asyncio.run(main())
```

- Ollama client by @peterychang in #5553

- Fix ollama docstring by @peterychang in #5600

- Ollama client docs by @peterychang in #5605
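With structured output, the reply content is expected to be a JSON string that matches the schema. With pydantic you would typically call `StructuredOutput.model_validate_json(result.content)`; treat the assumption that result.content holds that string as something to check. The sketch below does the same validation with only the standard library. `PersonName` and `parse_structured` are hypothetical, and the reply text is illustrative, not real model output.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class PersonName:
    """Hypothetical dataclass stand-in for the pydantic StructuredOutput model."""
    first_name: str
    last_name: str


def parse_structured(content: str) -> PersonName:
    """Parse a JSON reply and check that it has exactly the expected string fields."""
    data = json.loads(content)
    expected = {f.name for f in fields(PersonName)}
    if set(data) != expected:
        raise ValueError(f"expected keys {sorted(expected)}, got {sorted(data)}")
    if not all(isinstance(data[name], str) for name in expected):
        raise ValueError("all fields must be strings")
    return PersonName(**data)


# Illustrative reply text, not real model output.
reply = '{"first_name": "Neil", "last_name": "Armstrong"}'
print(parse_structured(reply))  # PersonName(first_name='Neil', last_name='Armstrong')
```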

New Required name Field in FunctionExecutionResult

The name field is now required in FunctionExecutionResult:

```python
from autogen_core.models import FunctionExecutionResult

exec_result = FunctionExecutionResult(call_id="...", content="...", name="...", is_error=False)
```

- fix: Update SKChatCompletionAdapter message conversion by @lspinheiro in #5749

Using thought Field in CreateResult and ThoughtEvent

CreateResult now uses the optional thought field for the extra text content that the model generates alongside a tool call. It is currently supported by OpenAIChatCompletionClient.

When available, the thought content will be emitted by AssistantAgent as a ThoughtEvent message.

- feat: Add thought process handling in tool calls and expose ThoughtEvent through stream in AgentChat by @ekzhu in #5500
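How an agent might turn such a result into stream events can be sketched with hypothetical dataclasses. When a thought is present, it is emitted as its own event, followed by the tool call requests. `ToyCreateResult`, `ToyEvent`, and `events_for` are illustrative names. The event ordering shown is an assumption about AssistantAgent's behavior, not a confirmed detail.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ToyCreateResult:
    """Hypothetical stand-in for a model result: tool calls plus optional free text."""
    tool_calls: List[str] = field(default_factory=list)
    thought: Optional[str] = None


@dataclass
class ToyEvent:
    kind: str
    content: str


def events_for(result: ToyCreateResult) -> List[ToyEvent]:
    """Emit a thought event first (when present), then one event per tool call."""
    events: List[ToyEvent] = []
    if result.thought:
        events.append(ToyEvent("ThoughtEvent", result.thought))
    events.extend(ToyEvent("ToolCallRequestEvent", call) for call in result.tool_calls)
    return events


result = ToyCreateResult(tool_calls=["get_weather"], thought="I should check the weather first.")
print([event.kind for event in events_for(result)])  # ['ThoughtEvent', 'ToolCallRequestEvent']
```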

New metadata Field in AgentChat Message Types

Added a metadata field for custom message content set by applications.

- Add metadata field to basemessage by @husseinmozannar in #5372

Exception in AgentChat Agents is now fatal

Now, if there is an exception raised within an AgentChat agent such as the AssistantAgent, instead of silently stopping the team, it will raise the exception.

- fix: Allow background exceptions to be fatal by @jackgerrits in #5716
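The difference between swallowing and propagating background exceptions can be shown with asyncio alone. `ToyRuntime` is a hypothetical runtime that runs handlers as background tasks. With `fail_fast=True`, the first handler exception is re-raised to the caller instead of being dropped. This is not AutoGen's runtime implementation.

```python
import asyncio
from typing import Any, Coroutine, List


class ToyRuntime:
    """Hypothetical runtime that runs agent handlers as background tasks."""

    def __init__(self, fail_fast: bool = True) -> None:
        self.fail_fast = fail_fast
        self._tasks: List[asyncio.Task] = []

    def spawn(self, coro: Coroutine[Any, Any, None]) -> None:
        # Must be called while the event loop is running.
        self._tasks.append(asyncio.create_task(coro))

    async def wait(self) -> None:
        results = await asyncio.gather(*self._tasks, return_exceptions=True)
        errors = [r for r in results if isinstance(r, BaseException)]
        if errors and self.fail_fast:
            raise errors[0]  # surface the failure instead of stopping silently


async def broken_agent() -> None:
    raise ValueError("agent failed")


async def main(fail_fast: bool) -> str:
    runtime = ToyRuntime(fail_fast=fail_fast)
    runtime.spawn(broken_agent())
    try:
        await runtime.wait()
    except ValueError as exc:
        return f"raised: {exc}"
    return "stopped silently"


if __name__ == "__main__":
    print(asyncio.run(main(fail_fast=False)))  # stopped silently
    print(asyncio.run(main(fail_fast=True)))   # raised: agent failed
```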

New Termination Conditions

New termination conditions for better control of agents.

See how to use TextMessageTermination to control a single-agent team running in a loop: https://microsoft.github.io/autogen/stable/user-guide/agentchat-user-guide/tutorial/teams.html#single-agent-team.

FunctionCallTermination is also discussed as an example for custom termination condition: https://microsoft.github.io/autogen/stable/user-guide/agentchat-user-guide/tutorial/termination.html#custom-termination-condition

- TextMessageTerminationCondition for agentchat by @EItanya in #5742

- FunctionCallTermination condition by @ekzhu in #5808
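The core logic of a custom termination condition like FunctionCallTermination can be sketched in plain Python. The condition scans new messages for the result of a named function and records that it has terminated. The library's base class uses an async `__call__` that returns a stop message, and an async `reset`. This simplified synchronous version, including `ToyMessage` and `ToyFunctionCallTermination`, is hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class ToyMessage:
    """Hypothetical message: plain text, or the result of a named function call."""
    source: str
    content: str
    function_name: Optional[str] = None


class ToyFunctionCallTermination:
    """Stop once a result for the given function appears (illustrative, not the library class)."""

    def __init__(self, function_name: str) -> None:
        self.function_name = function_name
        self.terminated = False

    def check(self, new_messages: Sequence[ToyMessage]) -> Optional[str]:
        if self.terminated:
            raise RuntimeError("termination already reached; call reset() first")
        for message in new_messages:
            if message.function_name == self.function_name:
                self.terminated = True
                return f"Function '{self.function_name}' was executed."
        return None  # keep the conversation going

    def reset(self) -> None:
        self.terminated = False


condition = ToyFunctionCallTermination("approve")
print(condition.check([ToyMessage("assistant", "Drafting the plan.")]))  # None
print(condition.check([ToyMessage("assistant", "ok", function_name="approve")]))  # Function 'approve' was executed.
```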

Docs Update

The ChainLit sample contains UserProxyAgent in a team, and shows you how to use it to get user input from UI. See: https://github.com/microsoft/autogen/tree/main/python/samples/agentchat_chainlit

- doc & sample: Update documentation for human-in-the-loop and UserProxyAgent; Add UserProxyAgent to ChainLit sample; by @ekzhu in #5656

- docs: Add logging instructions for AgentChat and enhance core logging guide by @ekzhu in #5655

- doc: Enrich AssistantAgent API documentation with usage examples. by @ekzhu in #5653

- doc: Update SelectorGroupChat doc on how to use O3-mini model. by @ekzhu in #5657

- update human in the loop docs for agentchat by @victordibia in #5720

- doc: update guide for termination condition and tool usage by @ekzhu in #5807

- Add examples for custom model context in AssistantAgent and ChatCompletionContext by @ekzhu in #5810

Bug Fixes

- Initialize BaseGroupChat before reset by @gagb in #5608

- fix: Remove R1 model family from is_openai function by @ekzhu in #5652

- fix: Crash in argument parsing when using Openrouter by @philippHorn in #5667

- Fix: Add support for custom headers in HTTP tool requests by @linznin in #5660

- fix: Structured output with tool calls for OpenAIChatCompletionClient by @ekzhu in #5671

- fix: Allow background exceptions to be fatal by @jackgerrits in #5716

- Fix: Auto-Convert Pydantic and Dataclass Arguments in AutoGen Tool Calls by @mjunaidca in #5737

Other Python Related Changes

- Update website version by @ekzhu in #5561

- doc: fix typo (recpients -> recipients) by @radamson in #5570

- feat: enhance issue templates with detailed guidance by @ekzhu in #5594

- Improve the model mismatch warning msg by @thinkall in #5586

- Fixing grammar issues by @OndeVai in #5537

- Fix typo in doc by @weijen in #5628

- Make ChatCompletionCache support component config by @victordibia in #5658

- DOCS: Minor updates to handoffs.ipynb by @xtophs in #5665

- DOCS: Fixed small errors in the text and made code format more consistent by @xtophs in #5664

- Replace the undefined tools variable with tool_schema parameter in ToolUseAgent class by @shuklaham in #5684

- Improve readme inconsistency by @gagb in #5691

- update versions to 0.4.8 by @ekzhu in #5689

- Update issue templates by @jackgerrits in #5686

- Change base image to one with arm64 support by @jackgerrits in #5681

- REF: replaced variable name in TextMentionTermination by @pengjunfeng11 in #5698

- Refactor AssistantAgent on_message_stream by @lspinheiro in #5642

- Fix accessibility issue 14 for visual accessibility by @peterychang in #5709

- Specify specific UV version should be used by @jackgerrits in #5711

- Update README.md for improved clarity and formatting by @gagb in #5714

- add anthropic native support by @victordibia in #5695

- 5663 ollama client host by @rylativity in #5674

- Fix visual accessibility issues 6 and 20 by @peterychang in #5725

- Add Serialization Instruction for MemoryContent by @victordibia in #5727

- Fix typo by @stuartleeks in #5754

- Add support for default model client, in AGS updates to settings UI by @victordibia in #5763

- fix incorrect field name from config to component by @peterj in #5761

- Make FileSurfer and CodeExecAgent Declarative by @victordibia in #5765

- docs: add note about markdown code block requirement in CodeExecutorA… by @jay-thakur in #5785

- add options to ollama client by @peterychang in #5805

- add stream_options to openai model by @peterj in #5788

- add api docstring to with_requirements by @victordibia in #5746

- Update with correct message types by @laurentran in #5789

- Update installation.md by @LuSrackhall in #5784

- Update magentic-one.md by @Paulhb7 in #5779

- Add ChromaDBVectorMemory in Extensions by @victordibia in #5308

N...

python-v0.4.7

Overview

This release contains various bug fixes and feature improvements for the Python API.

Related news: our .NET API website is up and running: https://microsoft.github.io/autogen/dotnet/dev/. Our .NET Core API now has dev releases. Check it out!

Important

Starting from v0.4.7, ModelInfo's required fields are enforced, so include all required fields whenever you pass model_info to a model client. For example:

```python
import asyncio

from autogen_core.models import UserMessage
from autogen_ext.models.openai import OpenAIChatCompletionClient


async def main():
    model_client = OpenAIChatCompletionClient(
        model="llama3.2:latest",
        base_url="http://localhost:11434/v1",
        api_key="placeholder",
        model_info={
            "vision": False,
            "function_calling": True,
            "json_output": False,
            "family": "unknown",
        },
    )
    response = await model_client.create([UserMessage(content="What is the capital of France?", source="user")])
    print(response)


asyncio.run(main())
```

See ModelInfo for more details.
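A check like the one now enforced can be sketched as a fail-early validator. The required key set below is taken from the four fields in the v0.4.7 example. Later releases may require more fields, so treat the set as an assumption. `validate_model_info` is a hypothetical helper, not the library's validator.

```python
from typing import Any, Dict

# Assumption: the four fields shown in the v0.4.7 example; newer releases may require more.
REQUIRED_MODEL_INFO_KEYS = {"vision", "function_calling", "json_output", "family"}


def validate_model_info(model_info: Dict[str, Any]) -> None:
    """Raise early, with a clear message, if any required ModelInfo field is missing."""
    missing = sorted(REQUIRED_MODEL_INFO_KEYS - model_info.keys())
    if missing:
        raise ValueError(f"model_info is missing required fields: {', '.join(missing)}")


try:
    validate_model_info({"vision": False, "function_calling": True})
except ValueError as err:
    print(err)  # model_info is missing required fields: family, json_output
```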

New Features

- DockerCommandLineCodeExecutor support for additional volume mounts, exposed host ports by @andrejpk in #5383

- Remove and get subscription APIs for Python GrpcWorkerAgentRuntime by @jackgerrits in #5365

- Add strict mode support to BaseTool, ToolSchema and FunctionTool to allow tool calls to be used together with structured output mode by @ekzhu in #5507

- Make CodeExecutor components serializable by @victordibia in #5527
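The strict mode item above concerns tool schemas. Strict tool calling (as in OpenAI's strict function calling) generally requires a closed schema: every object sets additionalProperties to false, and every property is listed as required. That requirement is our understanding of the provider's rules, so verify it against the provider documentation. `to_strict_schema` is a hypothetical helper, not how FunctionTool implements strict mode.

```python
import copy
from typing import Any, Dict


def to_strict_schema(schema: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of a JSON schema with every object closed and all properties required.

    Assumption: this mirrors the constraints strict tool calling imposes on schemas.
    """
    strict = copy.deepcopy(schema)  # leave the caller's schema untouched

    def visit(node: Any) -> None:
        if isinstance(node, dict):
            if node.get("type") == "object":
                properties = node.get("properties", {})
                node["additionalProperties"] = False
                node["required"] = list(properties.keys())
            for value in node.values():
                visit(value)  # recurse into nested objects and array items
        elif isinstance(node, list):
            for item in node:
                visit(item)

    visit(strict)
    return strict


weather_schema = {
    "type": "object",
    "properties": {"city": {"type": "string"}, "unit": {"type": "string"}},
    "required": ["city"],
}
print(to_strict_schema(weather_schema)["required"])  # ['city', 'unit']
```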

Bug Fixes

- fix: Address tool call execution scenario when model produces empty tool call ids by @ekzhu in #5509

- doc & fix: Enhance AgentInstantiationContext with detailed documentation and examples for agent instantiation; Fix a bug that caused a value error when the expected class is not provided in register_factory by @ekzhu in #5555

- fix: Add model info validation and improve error messaging by @ekzhu in #5556

- fix: Add warning and doc for Windows event loop policy to avoid subprocess issues in web surfer and local executor by @ekzhu in #5557

Doc Updates

- doc: Update API doc for MCP tool to include installation instructions by @ekzhu in #5482

- doc: Update AgentChat quickstart guide to enhance clarity and installation instructions by @ekzhu in #5499

- doc: API doc example for langchain database tool kit by @ekzhu in #5498

- Update Model Client Docs to Mention API Key from Environment Variables by @victordibia in #5515

- doc: improve tool guide in Core API doc by @ekzhu in #5546

Other Python Related Changes

- Update website version v0.4.6 by @ekzhu in #5481

- Reduce number of doc jobs for old releases by @jackgerrits in #5375

- Fix class name style in document by @weijen in #5516

- Update custom-agents.ipynb by @yosuaw in #5531

- fix: update 0.2 deployment workflow to use tag input instead of branch by @ekzhu in #5536

- fix: update help text for model configuration argument by @gagb in #5533

- Update python version to v0.4.7 by @ekzhu in #5558

New Contributors

Full Changelog: python-v0.4.6...python-v0.4.7

python-v0.4.6

Features and Improvements

MCP Tool

In this release we added a new built-in tool by @richard-gyiko for using Model Context Protocol (MCP) servers. MCP is an open protocol that allows agents to tap into an ecosystem of tools, from browsing the file system to managing Git repositories.

Here is an example of using the mcp-server-fetch tool for fetching web content as Markdown.

```python
# pip install mcp-server-fetch autogen-ext[mcp]
import asyncio

from autogen_agentchat.agents import AssistantAgent
from autogen_ext.models.openai import OpenAIChatCompletionClient
from autogen_ext.tools.mcp import StdioServerParams, mcp_server_tools


async def main() -> None:
    # Get the fetch tool from mcp-server-fetch.
    fetch_mcp_server = StdioServerParams(command="uvx", args=["mcp-server-fetch"])
    tools = await mcp_server_tools(fetch_mcp_server)

    # Create an agent that can use the fetch tool.
    model_client = OpenAIChatCompletionClient(model="gpt-4o")
    agent = AssistantAgent(name="fetcher", model_client=model_client, tools=tools, reflect_on_tool_use=True)  # type: ignore

    # Let the agent fetch the content of a URL and summarize it.
    result = await agent.run(task="Summarize the content of https://en.wikipedia.org/wiki/Seattle")
    print(result.messages[-1].content)


asyncio.run(main())
```

- Add MCP adapters to autogen-ext by @richard-gyiko in #5251

HTTP Tool

In this release we introduce a new built-in tool, built by @EItanya, for querying HTTP-based API endpoints. It lets agents call remotely hosted tools over HTTP.
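An HTTP tool configured with a templated path such as "/base64/{value}" has to turn the model's tool arguments into a concrete URL. The sketch below shows one way to do that with the standard library. It fills the path placeholders (percent-encoded) and sends any remaining arguments as a query string. `build_request_url` is hypothetical, and HttpTool's actual URL handling may differ.

```python
import string
from typing import Any, Dict
from urllib.parse import quote, urlencode, urlunsplit


def build_request_url(scheme: str, host: str, port: int, path: str, arguments: Dict[str, Any]) -> str:
    """Fill {placeholders} in the path from tool arguments; send the rest as a query string."""
    placeholders = {name for _, name, _, _ in string.Formatter().parse(path) if name}
    # Percent-encode path values so characters like "=" or "/" cannot break the path.
    filled_path = path.format(**{name: quote(str(arguments[name]), safe="") for name in placeholders})
    query = urlencode({k: v for k, v in arguments.items() if k not in placeholders})
    default_port = {"http": 80, "https": 443}.get(scheme)
    netloc = host if port == default_port else f"{host}:{port}"
    return urlunsplit((scheme, netloc, filled_path, query, ""))


print(build_request_url("https", "httpbin.org", 443, "/base64/{value}", {"value": "YWJjZGU="}))
# https://httpbin.org/base64/YWJjZGU%3D
```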

Here is an example of using the httpbin.org API for base64 decoding.

```python
# pip install autogen-ext[http-tool]
import asyncio

from autogen_agentchat.agents import AssistantAgent
from autogen_agentchat.messages import TextMessage
from autogen_core import CancellationToken
from autogen_ext.models.openai import OpenAIChatCompletionClient
from autogen_ext.tools.http import HttpTool

# Define a JSON schema for a base64 decode tool
base64_schema = {
    "type": "object",
    "properties": {
        "value": {"type": "string", "description": "The base64 value to decode"},
    },
    "required": ["value"],
}

# Create an HTTP tool for the httpbin API
base64_tool = HttpTool(
    name="base64_decode",
    description="base64 decode a value",
    scheme="https",
    host="httpbin.org",
    port=443,
    path="/base64/{value}",
    method="GET",
    json_schema=base64_schema,
)


async def main():
    # Create an assistant with the base64 tool
    model = OpenAIChatCompletionClient(model="gpt-4")
    assistant = AssistantAgent("base64_assistant", model_client=model, tools=[base64_tool])

    # The assistant can now use the base64 tool to decode the string
    response = await assistant.on_messages(
        [TextMessage(content="Can you base64 decode the value 'YWJjZGU=', please?", source="user")],
        CancellationToken(),
    )
    print(response.chat_message.content)


asyncio.run(main())
```

MagenticOne Improvement

We introduced several improvements to MagenticOne (M1) and its agents. M1 now works with text-only models that can't read screenshots, and its prompts have been changed so that it works better with smaller models.

Did you know you can now configure the m1 CLI tool with a YAML configuration file?

- WebSurfer: print viewport text by @afourney in #5329

- Allow m1 cli to read a configuration from a yaml file. by @afourney in #5341

- Add text-only model support to M1 by @afourney in #5344

- Ensure descriptions appear each on one line. Fix web_surfer's desc by @afourney in #5390

- Prompting changes to better support smaller models. by @afourney in #5386

- doc: improve m1 docs, remove duplicates by @ekzhu in #5460

- M1 docker by @afourney in #5437

SelectorGroupChat Improvement

In this release we made several improvements so that SelectorGroupChat works well with smaller models, such as Llama 13B, and with hosted models that do not support the name field in Chat Completion messages.

Did you know you can use models served through Ollama directly with the OpenAIChatCompletionClient? See: https://microsoft.github.io/autogen/stable/user-guide/agentchat-user-guide/tutorial/models.html#ollama

- Get SelectorGroupChat working for Llama models. by @afourney in #5409

- Mitigates #5401 by optionally prepending names to messages. by @afourney in #5448

- fix: improve speaker selection in SelectorGroupChat for weaker models by @ekzhu in #5454
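The name-prepending mitigation from #5448 can be sketched with the standard library. When an endpoint ignores or rejects the "name" field, the speaker's name is folded into the message text so the model can still tell participants apart. The "source: content" prefix format, the uniform "user" role, and the helper names are assumptions for illustration, not AutoGen's exact transformation.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class AgentMessage:
    source: str
    content: str


def to_chat_messages(messages: List[AgentMessage], prepend_name: bool) -> List[Dict[str, str]]:
    """Convert agent messages to chat-completion style dicts (hypothetical helper)."""
    converted: List[Dict[str, str]] = []
    for message in messages:
        if prepend_name:
            # Fold the speaker into the text for endpoints that drop the "name" field.
            converted.append({"role": "user", "content": f"{message.source}: {message.content}"})
        else:
            converted.append({"role": "user", "name": message.source, "content": message.content})
    return converted


history = [AgentMessage("planner", "Search for flights."), AgentMessage("critic", "Check the dates first.")]
for entry in to_chat_messages(history, prepend_name=True):
    print(entry["content"])
```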

Gemini Model Client

We enhanced our support for Gemini models. Now you can use Gemini models without passing in model_info and base_url.

```python
import asyncio

from autogen_core.models import UserMessage
from autogen_ext.models.openai import OpenAIChatCompletionClient


async def main():
    model_client = OpenAIChatCompletionClient(
        model="gemini-1.5-flash-8b",
        # api_key="GEMINI_API_KEY",
    )
    response = await model_client.create([UserMessage(content="What is the capital of France?", source="user")])
    print(response)


asyncio.run(main())
```

- feat: add gemini model families, enhance group chat selection for Gemini model and add tests by @ekzhu in #5334

- feat: enhance Gemini model support in OpenAI client and tests by @ekzhu in #5461

AGBench Update

New Sample

Interested in integration with FastAPI? We have a new sample: https://github.com/microsoft/autogen/blob/main/python/samples/agentchat_fastapi

- Add sample chat application with FastAPI by @ekzhu in #5433

- docs: enhance human-in-the-loop tutorial with FastAPI websocket example by @ekzhu in #5455

Bug Fixes

- Fix reading string args from m1 cli by @afourney in #5343

- Fix summarize_page in a text-only context, and for unknown models. by @afourney in #5388

- fix: warn on empty chunks, don't error out by @MohMaz in #5332

- fix: add state management for oai assistant by @lspinheiro in #5352

- fix: streaming token mode cannot work in function calls and will infi… by @so2liu in #5396

- fix: do not count agent event in MaxMessageTermination condition by @ekzhu in #5436

- fix: remove sk tool adapter plugin name by @lspinheiro in #5444

- fix & doc: update selector prompt documentation and remove validation checks by @ekzhu in #5456

- fix: update SK adapter stream tool call processing. by @lspinheiro in #5449

- fix: Update SK kernel from tool to use method. by @lspinheiro in #5469

Other Python Changes

- Update Python website to v0.4.5 by @ekzhu in #5316

- Adding o3 family: o3-mini by @razvanvalca in #5325

- Ensure ModelInfo field is serialized for OpenAIChatCompletionClient by @victordibia in #5315

- docs(core_distributed-group-chat): fix the typos in the docs in the README.md by @jsburckhardt in #5347

- Assistant agent drop images when not provided with a vision-capable model. by @afourney in #5351

- docs(python): add instructions for syncing dependencies and checking samples by @ekzhu in #5362

- Fix typo by @weijen in #5361

- docs: add blog link to README for updates and resources by @gagb in #5368

- Memory component base by @EItanya in #5380

- Fixed example code in doc:Custom Agents by @weijen in #5381

- Various web surfer fixes. by @afourney in #5393

- Refactor grpc channel connection in servicer by @jackgerrits in #5402

- Updates to proto for state apis by @jackgerrits in #5407

- feat: add integration workflow for testing multiple packages by @ekzhu in #5412

- Flush console output after every message. by @afourney in #5415

- Use a root json element instead of dict by @jackgerrits in #5430

- Split out GRPC tests by @jackgerrits in #5431

- feat: enhance AzureAIChatCompletionClient validation and add unit tests by @ekzhu in #5417

- Fix typo in Swarm doc by @weijen in #5435

- Update teams.ipynb : In the sample code the termination condition is set to the text "APPROVE" but the documentation mentions "TERMIN...

autogenstudio-v0.4.1

What's New

AutoGen Studio Declarative Configuration

- In #5172, you can now build your agents in Python and export them to a JSON format that works in AutoGen Studio.

AutoGen Studio now uses the same declarative configuration interface as the rest of the AutoGen library. You can create your agent teams in Python and then dump_component() them into a JSON spec that AutoGen Studio can use directly. Because the exact same specs work in both places, this eliminates compatibility (or feature inconsistency) errors between AGS and AgentChat Python.

See a video tutorial on AutoGen Studio v0.4 (02/25) - https://youtu.be/oum6EI7wohM

Here's an example of an agent team and how it is converted to a JSON file:

```python
from autogen_agentchat.agents import AssistantAgent
from autogen_agentchat.conditions import TextMentionTermination
from autogen_agentchat.teams import RoundRobinGroupChat
from autogen_ext.models.openai import OpenAIChatCompletionClient

agent = AssistantAgent(
    name="weather_agent",
    model_client=OpenAIChatCompletionClient(
        model="gpt-4o-mini",
    ),
)

agent_team = RoundRobinGroupChat([agent], termination_condition=TextMentionTermination("TERMINATE"))
config = agent_team.dump_component()
print(config.model_dump_json())
```

```json
{
  "provider": "autogen_agentchat.teams.RoundRobinGroupChat",
  "component_type": "team",
  "version": 1,
  "component_version": 1,
  "description": "A team that runs a group chat with participants taking turns in a round-robin fashion\n to publish a message to all.",
  "label": "RoundRobinGroupChat",
  "config": {
    "participants": [
      {
        "provider": "autogen_agentchat.agents.AssistantAgent",
        "component_type": "agent",
        "version": 1,
        "component_version": 1,
        "description": "An agent that provides assistance with tool use.",
        "label": "AssistantAgent",
        "config": {
          "name": "weather_agent",
          "model_client": {
            "provider": "autogen_ext.models.openai.OpenAIChatCompletionClient",
            "component_type": "model",
            "version": 1,
            "component_version": 1,
            "description": "Chat completion client for OpenAI hosted models.",
            "label": "OpenAIChatCompletionClient",
            "config": { "model": "gpt-4o-mini" }
          },
          "tools": [],
          "handoffs": [],
          "model_context": {
            "provider": "autogen_core.model_context.UnboundedChatCompletionContext",
            "component_type": "chat_completion_context",
            "version": 1,
            "component_version": 1,
            "description": "An unbounded chat completion context that keeps a view of the all the messages.",
            "label": "UnboundedChatCompletionContext",
            "config": {}
          },
          "description": "An agent that provides assistance with ability to use tools.",
          "system_message": "You are a helpful AI assistant. Solve tasks using your tools. Reply with TERMINATE when the task has been completed.",
          "model_client_stream": false,
          "reflect_on_tool_use": false,
          "tool_call_summary_format": "{result}"
        }
      }
    ],
    "termination_condition": {
      "provider": "autogen_agentchat.conditions.TextMentionTermination",
      "component_type": "termination",
      "version": 1,
      "component_version": 1,
      "description": "Terminate the conversation if a specific text is mentioned.",
      "label": "TextMentionTermination",
      "config": { "text": "TERMINATE" }
    }
  }
}
```

Note: If you are building custom agents and want to use them in AGS, they must inherit from the AgentChat BaseChatAgent class and the Component class.

Note: This is a breaking change in AutoGen Studio. You will need to update your AGS specs for any teams created with autogenstudio versions earlier than 0.4.1.
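The "provider" plus "config" shape in the JSON spec can be illustrated with a minimal round trip using only the standard library. Each object is recorded as an importable class path plus constructor arguments, and rebuilt by importing that class. The real dump_component/load_component also track fields such as component_type and version. `dump_component` and `load_component` below are simplified hypothetical helpers, not the AutoGen implementations. Note that loading a spec imports whatever class it names, so only load specs from trusted sources.

```python
import importlib
import json
from datetime import timedelta
from typing import Any, Dict


def dump_component(obj: Any, config: Dict[str, Any]) -> Dict[str, Any]:
    """Describe an object as {provider, config} so it can be saved as JSON (simplified)."""
    cls = type(obj)
    return {"provider": f"{cls.__module__}.{cls.__name__}", "config": config}


def load_component(spec: Dict[str, Any]) -> Any:
    """Import the class named by "provider" and construct it from "config" (simplified)."""
    module_name, _, class_name = spec["provider"].rpartition(".")
    cls = getattr(importlib.import_module(module_name), class_name)
    return cls(**spec["config"])


original = timedelta(days=1, seconds=30)
text = json.dumps(dump_component(original, {"days": 1, "seconds": 30}))
restored = load_component(json.loads(text))
print(text)  # {"provider": "datetime.timedelta", "config": {"days": 1, "seconds": 30}}
print(restored == original)  # True
```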

Ability to Test Teams in Team Builder

- In #5392, you can now test your teams as you build them. There is no need to switch between the team builder and playground sessions to test.

You can now test teams directly in the team builder UI. As you edit your team (either via drag and drop or by editing the JSON spec), you can run it without leaving the team builder.

New Default Agents in Gallery (Web Agent Team, Deep Research Team)

- #5416 adds implementations of a Web Agent Team and a Deep Research Team to the default gallery.

The default gallery now has two additional default agents that you can build on and test:

- Web Agent Team - A team with 3 agents - a Web Surfer agent that can browse the web, a Verification Assistant that verifies and summarizes information, and a User Proxy that provides human feedback when needed.

- Deep Research Team - A team with 3 agents - a Research Assistant that performs web searches and analyzes information, a Verifier that ensures research quality and completeness, and a Summary Agent that provides a detailed markdown summary of the research as a report to the user.

Other Improvements

Existing features that remain available in v0.4.1:

- Real-time agent updates streaming to the frontend

- Run control: You can now stop agents mid-execution if they're heading in the wrong direction, adjust the team, and continue

- Interactive feedback: Add a UserProxyAgent to get human input through the UI during team runs

- Message flow visualization: See how agents communicate with each other

- Ability to import specifications from external galleries

- Ability to wrap agent teams into an API using the AutoGen Studio CLI

To update to the latest version:

pip install -U autogenstudio

The overall roadmap for AutoGen Studio is here: #4006.

Contributions welcome!