import urllib

def get_page(url):
    # Fetch the page at `url` from the web and return its HTML source.
    try:
        return urllib.urlopen(url).read()
    except IOError:
        # Unreachable or malformed URLs are treated as empty pages so that
        # a crawl can continue past them.
        return ""

''' try: if url == "http://xkcd.com/353": return ' <title>xkcd: Python</title>

Permanent link to this comic: http://xkcd.com/353/

Image URL (for hotlinking/embedding): http://imgs.xkcd.com/comics/python.png

XKCD updates every Monday, Wednesday, and Friday.

XKCD updates every Monday, Wednesday, and Friday. Blag: Remember geohashing? Something pretty cool happened Sunday.

Permanent link to this comic: http://xkcd.com/554/

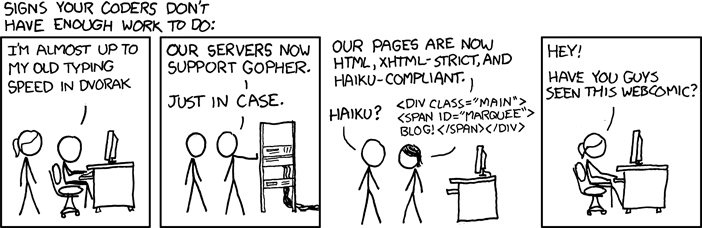

Image URL (for hotlinking/embedding): http://imgs.xkcd.com/comics/not_enough_work.png

def get_next_target(page):
    # Return the first link target in `page` and the index of its closing
    # quote, or (None, 0) when the page contains no more links.
    start_link = page.find('<a href=')
    if start_link == -1:
        return None, 0
    start_quote = page.find('"', start_link)
    end_quote = page.find('"', start_quote + 1)
    url = page[start_quote + 1:end_quote]
    return url, end_quote

def union(p, q):
    # Append to p, in place, every element of q that p does not already hold.
    for e in q:
        if e not in p:
            p.append(e)
    return p

def get_all_links(page):
    # Collect every link target found in `page`, in order of appearance.
    links = []
    while True:
        url, endpos = get_next_target(page)
        if url:
            links.append(url)
            page = page[endpos:]
        else:
            break
    return links

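def crawl_web(seed):
    # Crawl outward from `seed`, following links depth-first, and return
    # the list of pages visited.
    print seed
    tocrawl = [seed]
    crawled = []
    while tocrawl:
        page = tocrawl.pop()
        if page not in crawled:
            union(tocrawl, get_all_links(get_page(page)))
            crawled.append(page)
        # Progress trace for each iteration of the crawl.
        print "TO CRAWL ->>>>>>>>>"
        print tocrawl
        print "CRAWLED ->>>>>>>>>>>"
        print crawled
    return crawled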

# Crawl from the seed page; crawl_web fetches it, so no separate fetch is needed.
url = "http://www.iramykytyn.com/blog"
links = crawl_web(url)
print links