-

Notifications

You must be signed in to change notification settings - Fork 22

Open

Description

Hi,

I have used this repo to build our current drft solution for our team.

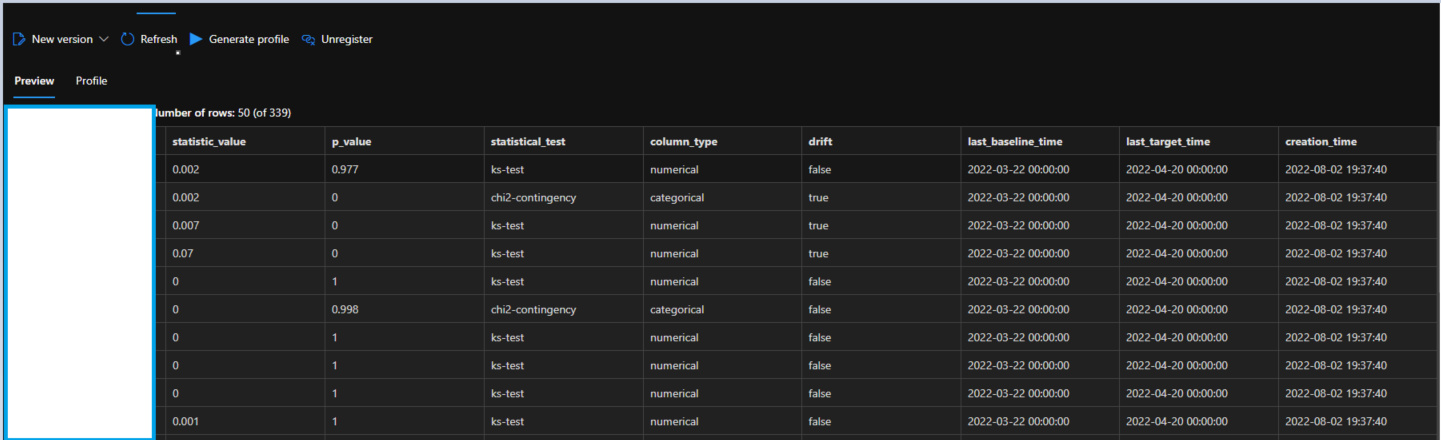

I am historizing the dataset using an azure ml tabular dataset by passing the path of my drift loadings in blob. This allows me to query the data over time and plot how the p-values are varying.

However in order to detect if we have drift or not in the overall dataset it is unfair to just look to one feature.

I am using a bonferroni correction: Bland JM, Altman DG: Multiple significance tests: The Bonferroni method. BMJ 1995;310(6973):170.

In the same way that it is implemented in Seldon Core:

# TODO: return both feature-level and batch-level drift predictions by default

# values below p-value threshold are drift

if drift_type == 'feature':

drift_pred = (p_vals < self.p_val).astype(int)

elif drift_type == 'batch' and self.correction == 'bonferroni':

threshold = self.p_val / self.n_features

drift_pred = int((p_vals < threshold).any()) # type: ignore[assignment]

elif drift_type == 'batch' and self.correction == 'fdr':

drift_pred, threshold = fdr(p_vals, q_val=self.p_val) # type: ignore[assignment]

else:

raise ValueError('`drift_type` needs to be either `feature` or `batch`.')Maybe something worth to add to the example!

Metadata

Metadata

Assignees

Labels

No labels